Neural Network and its applications: Difference between revisions


==Neural networks as a type of machine learning==

There are dozens of machine learning methods, such as clustering, regression, and statistical classification. Different tasks call for different optimal methods. According to Alexander Kraynov, a Yandex specialist and head of the computer vision service, "neural networks are now the most fashionable and interesting method of machine learning, which wins in most complex tasks."

<p>Neural networks are a type of machine learning algorithm. This method is the one most often used to solve problems, and most of the available material (manuals, Internet courses, lectures) is devoted to it, so we can conclude that neural networks are currently the most common and therefore the most popular type of machine learning. That is why it makes sense to study machine learning methods using neural networks as an example. <ref name="title">http://machinelearning.ru/wiki/index.php?title</ref></p>

===Principle of biological neural networks===

In their essence and structure, artificial neural networks resemble the human nervous system, where each neuron either transmits an electrical charge (a signal, the action potential) to a neighboring neuron or does not. The author of the article "How does our brain work or how to model the soul?" argues that a neuron is better represented not as a mere calculator but as an excitation retransmitter that chooses the direction and strength of excitation propagation.

The structure of neurons and neural networks in the human brain is much more complex than that of artificial ones.

[[File:4nn.png|450px|thumb|Figure 1. Mathematical model of a biological neuron.]]

The main parts of a neuronal cell responsible for signal reception and transmission are the membrane, dendrites, synapses, and axon. The membrane surrounds the cell body. At the ends of the dendrites there are synapses, the contact points between two neurons. The axon conducts excitation from the neuron body to other neurons. Simplifying the functions of these components as far as possible, the neuron membrane is a receiver, and the synapses on the dendrites are signal transmitters.

It was previously thought that a neuron engages its synapses randomly to transmit signals, but more in-depth studies have shown that a neuron can change the degree of its impact on the target cell through the strength of its synapses. Depending on various factors, such as an insufficient amount of mediator (a substance released during chemical signal transfer), the neuron may either fail to transfer a charge at all or start an excitation process.

If a certain area (a chain of neurons) is frequently activated, a person begins to form reflexes by creating associative connections, which act as complex electrical circuits. The more often and the more strongly charges pass through a certain group of neurons, the greater the probability that the same connection will be involved in the future. This phenomenon can be compared to trampled trails in a forest: the wider and more even the path, the greater the chance that a person will walk along it. Associative connections are what allow a person to form memory.

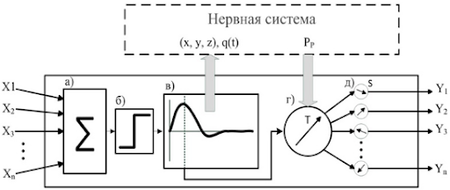

There is a mathematical model describing the work of a neuron (Fig. 1).

In the first stage (a), the signals x1, x2, ..., xn (real numbers characterizing the strength of the synapses) pass through the adder, which combines the incoming signals. The result then passes through a threshold function with two possible values (b and c): 1 if the summed synaptic strength is sufficient to activate the neuron, and 0 if it is not. Then comes a vector adjustment (d) and a reassessment of the strengths of all synapses in the neuron (e). In other words, the model determines where and with what force the neuron will transmit the signal. <ref name="habr">https://habr.com/ru/post/383753/</ref>
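The adder and threshold stages of this model can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `threshold_neuron`, the threshold value of 1.0, and the choice of "greater than or equal" as the firing condition are not taken from the cited article.

```python
def threshold_neuron(signals, threshold):
    """Return 1 if the summed input reaches the threshold, otherwise 0."""
    total = sum(signals)  # adder: combine the incoming signals
    # threshold function: the neuron either fires (1) or stays silent (0)
    return 1 if total >= threshold else 0


# Hypothetical synaptic strengths and threshold, chosen only for illustration.
print(threshold_neuron([0.2, 0.5, 0.4], threshold=1.0))  # sum is about 1.1, neuron fires
print(threshold_neuron([0.2, 0.1, 0.3], threshold=1.0))  # sum is about 0.6, neuron stays silent
```

The adjustment stages (d) and (e) are left out, since the text does not specify how the synaptic strengths are updated.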

===Principle of artificial neural networks===

Artificial neural networks use a mathematical model similar to that of biological neural networks to solve various problems. An artificial neuron consists of three parts: a multiplier, an adder, and a non-linear transducer. The multiplier is the analog of a synapse in a nerve cell: it connects neurons with each other and multiplies input signals by numbers that characterize the strength of the connections (synapse weights). The adder then sums the weighted input signals, and the non-linear transducer applies a non-linear function of one argument, called the activation function, to the adder output.

[[File:5nn.png|350px|thumb|Figure 2. Types of neural networks by structure.]]

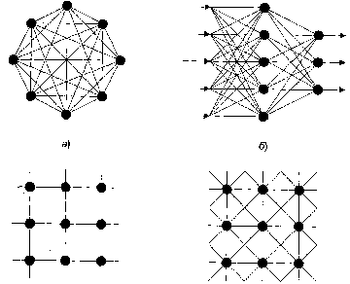

In other words, a neuron receives several numbers as input, multiplies each of them by the weight of its connection, adds up the products, and, depending on the result, produces one new number as output. This is how a single neuron works, while a neural network is a number of neurons connected to each other in a certain way. Neural networks are classified into three groups according to their connection method (Fig. 2): fully connected (a), layered (single- or multilayered) (b), and weakly connected (c).
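The three parts of an artificial neuron map directly onto code. The sketch below is a minimal illustration, assuming a sigmoid as the activation function; the names `neuron` and `sigmoid` are chosen here and do not come from the cited sources.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs, weights, activation=sigmoid):
    # Multiplier: scale each input by the weight of its connection.
    products = [x * w for x, w in zip(inputs, weights)]
    # Adder: sum the weighted inputs.
    total = sum(products)
    # Non-linear transducer: apply the activation function to the sum.
    return activation(total)


# Two inputs with illustrative weights.
print(round(neuron([1.0, 0.0], [0.45, -0.12]), 2))  # 0.61
```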

In multilayer neural networks, groups of neurons are combined into layers. All neurons in one layer receive identical signals but process them differently. Each layer can contain any number of neurons. The network always has one input layer and one output layer, but the number of intermediate (hidden) layers is arbitrary. Both the number of neurons and the number of layers depend on the problem to be solved. Since complex networks require large computational power, it is not reasonable to build a large network to solve a simple problem.

In a neural network, the trainable parameters are the weights of the synapses (the connections from one neuron to another). Weights are usually denoted by the Latin letter "w". The values that neurons operate on lie in the range [0,1] or [-1,1]. The greater a weight is relative to the others, the more strongly the corresponding input dominates in the next neuron.

Suppose you want to build a neural network that gives individual movie recommendations. You first need tables (matrices) containing data about other users' ratings: for example, "1" if a user liked a movie and "0" if not. This will be the input layer. The hidden layers will be matrices representing the contribution of different criteria (e.g. actor, director, genre) to the user's final score. A neural network is thus a formula that internally amounts to little more than matrix multiplication, and the task of this formula is to minimize the error by changing the values of the weights. As machine learning developers point out, the algorithms at first give completely random answers, but as the coefficients are adjusted the error gradually decreases, and at a certain point (for example, when 95% of the answers are correct) the network is considered trained. By analogy with biological neural networks, artificial neural networks also come to prefer certain sets of neurons with certain coefficients, because during training the algorithms gradually sift out incorrect coefficients and combinations, which eventually leads to better answers. <ref name="studfile">https://studfile.net/preview/3170620/page:3/</ref> <ref name="studfile6">https://studfile.net/preview/3170620/page:2/#6</ref> <ref name="studfile2">https://studfile.net/preview/3170620/page:2/</ref> <ref name="studfile8">https://studfiles.net/preview/3170620/page:3/#8</ref> <ref name="habr2">https://habr.com/post/312450/</ref> <ref name="youtu">https://youtu.be/po31nmBzbCY</ref>
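The idea that training only adjusts coefficients until the error shrinks can be shown on a toy problem. The sketch below trains a single sigmoid neuron with plain gradient descent. Everything specific in it is an assumption made for illustration: the logical OR data set, the bias term, the zero initial weights (used instead of random ones so the run is repeatable), the learning rate, and the number of epochs.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


# Toy training data (assumed for illustration): logical OR of two inputs.
SAMPLES = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
           ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]


def predict(weights, bias, inputs):
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def mean_squared_error(weights, bias):
    return sum((t - predict(weights, bias, x)) ** 2
               for x, t in SAMPLES) / len(SAMPLES)


def train(epochs=2000, learning_rate=0.5):
    weights, bias = [0.0, 0.0], 0.0
    history = [mean_squared_error(weights, bias)]
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for inputs, target in SAMPLES:
            out = predict(weights, bias, inputs)
            # Derivative of the squared error, passed back through the sigmoid.
            delta = 2.0 * (out - target) * out * (1.0 - out) / len(SAMPLES)
            grad_w = [g + delta * x for g, x in zip(grad_w, inputs)]
            grad_b += delta
        # Move every coefficient a small step against its gradient.
        weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b
        history.append(mean_squared_error(weights, bias))
    return weights, bias, history


weights, bias, history = train()
print(f"error before training: {history[0]:.3f}, after: {history[-1]:.3f}")
```

Real networks have many neurons and layers and usually rely on backpropagation to compute the gradients, but the loop has the same shape: compute the error, adjust the weights, repeat.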

===Data Processing by Neural Networks===

To be processed by neural networks, data must be represented in the range [0,1] or [-1,1]. Not all data can initially be represented this way, so activation functions are used to normalize values into one of these ranges. There are many different activation functions, but the most commonly used are the sigmoid f(x)= [[File:1nn.png|40px]] and the hyperbolic tangent f(x)= [[File:2nn.png|40px]] .
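For reference, the standard textbook definitions of these two functions are given below, on the assumption that the formula images show the same expressions. The sigmoid maps any real number into (0, 1) and the hyperbolic tangent maps it into (-1, 1), which matches the two ranges mentioned above.

<math>f_{\text{sigmoid}}(x) = \frac{1}{1 + e^{-x}}, \qquad f_{\tanh}(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}} = \frac{e^{2x} - 1}{e^{2x} + 1}</math>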

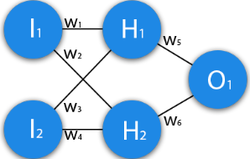

[[File:6nn.png|250px|thumb|Figure 3. Neuron network connection model.]]

The operation of a neural network can be illustrated with a simple task.

<p>Given: I1=1, I2=0, w1=0.45, w2=0.78, w3=-0.12, w4=0.13, w5=1.5, w6=-2.3.

<br>I - input neuron

<br>w - weight

<br>H - hidden neuron

<br>O - output neuron</p>

The solution:

<br>1. H1 input = 1*0.45 + 0*(-0.12) = 0.45: the two input signals are multiplied by the weights of the first hidden neuron and added together;

<br>2. H1 output = sigmoid(0.45) = 0.61: the sum goes through the activation function of the first hidden neuron;

<br>3. H2 input = 1*0.78 + 0*0.13 = 0.78: the two input signals are multiplied by the weights of the second hidden neuron and added together;

<br>4. H2 output = sigmoid(0.78) = 0.69: the sum goes through the activation function of the second hidden neuron;

<br>5. O1 input = 0.61*1.5 + 0.69*(-2.3) = -0.672: both results are multiplied by the weights of the output neuron and added together;

<br>6. O1 output = sigmoid(-0.672) = 0.33: the sum goes through the activation function;

<br>7. O1 ideal = 1 (1 XOR 0 = 1): this is the ideal answer;

<br>8. Error = ((1-0.33)^2)/1 = 0.45: the error is calculated with the "Mean Squared Error" formula:

[[File:3nn.png|220px]] .

The result is 0.33 and the error is 0.45 (45%). All calculations within a neural network follow this pattern.
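The same forward pass can be checked with a short script. This is a sketch using only the values given in the task; the variable names are chosen here for readability. Because the script keeps full precision instead of rounding each intermediate value to two decimals, its output is about 0.34 and its error about 0.43, close to but not identical with the hand-computed 0.33 and 0.45.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


# Inputs and weights from the task statement.
I1, I2 = 1.0, 0.0
w1, w2, w3, w4, w5, w6 = 0.45, 0.78, -0.12, 0.13, 1.5, -2.3

# Hidden layer: weighted sum of the inputs, then activation.
h1_out = sigmoid(I1 * w1 + I2 * w3)
h2_out = sigmoid(I1 * w2 + I2 * w4)

# Output neuron: weighted sum of the hidden outputs, then activation.
o1_out = sigmoid(h1_out * w5 + h2_out * w6)

# Mean squared error over a single training example.
ideal = 1.0  # 1 XOR 0
error = (ideal - o1_out) ** 2 / 1

print(f"H1={h1_out:.2f} H2={h2_out:.2f} O1={o1_out:.2f} error={error:.2f}")
```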

We can conclude that creating a trained neural network means changing the weights until the error is close to zero, and that training consists only of adjusting these coefficients. Of all the machine learning algorithms available today, the most advanced are those based on the principles of the human brain. <ref name="habr2">https://habr.com/post/312450/</ref>

==Neural Networks in medical diagnosis and applications==

===Introduction and architecture of Neural Networks===

==Neural Networks application in forecasting natural hazards==

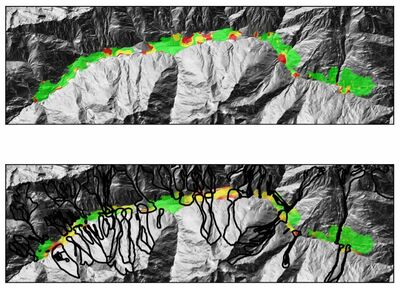

[[File:8nn.JPG|400px|thumb|Figure 4. The hazard zone map of Stanzer Valley (top) alongside the prediction of the neural network (bottom). <ref name="maps">https://arxiv.org/pdf/1802.07257.pdf</ref>]]

===ANN application in prediction and management of natural disasters===

Disaster prediction based on an ANN model can be a crucial part of the pre-disaster management phase for disasters of climatic origin, including flooding and drought. Because disastrous flooding can be triggered by various factors, such as rainfall, snowmelt, high tidal waves, or the failure of river blockages, a sufficient dataset on flood-prone areas is required for successful forecasts. Data from base sensors, including temperature, humidity, rainfall, wind speed, and underground water level, is used as input. The ANN is first trained on a selected training database consisting of flooding occurrences and non-occurrences. It then forecasts the probability of flooding occurring or not occurring by processing the climatic data of the examined area as input. According to the cited study, the test evaluation results are statistically precise. <ref name="flood">https://www.researchgate.net/publication/4185987_A_neural_network_based_prediction_model_for_flood_in_a_disaster_management_system_with_sensor_networks/</ref>

<br>4. Sensor errors and accuracy

</p>There is no commonly agreed definition of the term “safety” in machine learning or deep learning. <ref name="cars">https://arxiv.org/pdf/1910.07738.pdf</ref>

<p></p>

Neural networks, machine learning, and deep learning try to mimic human interactions within a given scenario. The main focus of almost all neural networks is to acquire human-like experience, leaving aside human understanding of the situation. Sometimes dilemmas and problems require a different point of view, which neural networks can provide.

====Politics====

Deepfakes have already been used many times to fake political propaganda. One recent example is a misleading speech attributed to the prime minister of Belgium, Sophie Wilmès: the generated video featured her making false statements about COVID-19. <ref name="brusselstimes">https://www.brusselstimes.com/news/belgium-all-news/politics/106320/xr-belgium-posts-deepfake-of-belgian-premier-linking-covid-19-with-climate-crisis/</ref>

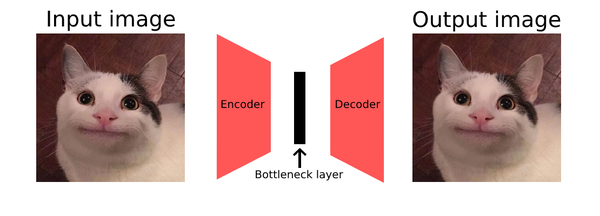

[[File:7nn.png|600px|thumb|Figure 5. Deepfakes are generated by autoencoder.]]

===Deepfakes generating technology===

====Autoencoders====

Deepfakes are currently generated mainly by one type of neural network, called an autoencoder. This algorithm performs two tasks. In the first stage, it takes an input image and encodes it into a small set of numerical values. Encoding continues until the bottleneck layer, which contains the target number of variables needed to decode the image. The second stage is the reverse process: the variables from the bottleneck are decoded to recreate the original input image. In essence, the autoencoder is a trainable encoder-decoder algorithm.

To train it, we need to provide the autoencoder with as many images of one person's face as possible; for the best result, the pictures should cover many different angles and various lighting conditions. Ideally, the algorithm is fed up to a couple of thousand pictures. The autoencoder eventually finds a way to encode the person's facial features in a smaller and smaller set of variables, which makes decoding faster and more accurate. <ref name="bdtechtalks">https://bdtechtalks.com/2020/09/04/what-is-deepfake/</ref> <ref name="kdnuggets">https://www.kdnuggets.com/2018/03/exploring-deepfakes.html/2</ref>
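The encode, bottleneck, decode flow described above can be illustrated with a deliberately tiny sketch. It is not a trained model: the 4-value "image", the fixed `ENCODER` and `DECODER` matrices, and all function names are hypothetical and chosen by hand so that the round trip is easy to follow. A real autoencoder learns these weights from thousands of images and uses non-linear layers.

```python
# Hypothetical fixed weights: 4 input values -> 2-value bottleneck -> 4 values.
ENCODER = [[0.5, 0.5, 0.0, 0.0],
           [0.0, 0.0, 0.5, 0.5]]
DECODER = [[1.0, 0.0],
           [1.0, 0.0],
           [0.0, 1.0],
           [0.0, 1.0]]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def encode(image):
    # Compress the input into the small set of bottleneck variables.
    return matvec(ENCODER, image)


def decode(code):
    # Recreate an image-sized output from the bottleneck variables.
    return matvec(DECODER, code)


image = [0.2, 0.2, 0.8, 0.8]  # toy "image" made of four pixel values
code = encode(image)          # two numbers instead of four
restored = decode(code)       # back to four values

print(len(image), "->", len(code), "->", len(restored))
```

Here the reconstruction matches the input only because the toy image has the structure that the hand-picked weights expect. Training is what lets a real autoencoder find such a compact representation for faces.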

====Deepfake autoencoders====

Deepfake generation uses two trained autoencoders: one is trained to encode and decode the face of the person imitating the speech or action, and the other is trained to do the same with the face of the victim (for example, an actor or a character).

Once both autoencoders are properly trained, the mechanism swaps the encoder outputs: the encoded face of the imitating person is passed to the victim's decoder, which yields the desired product, the victim's face placed onto the face of the imitating person in the video. Generating the result can take from several hours to multiple days, depending on the amount of input and the complexity of the desired result. <ref name="bdtechtalks">https://bdtechtalks.com/2020/09/04/what-is-deepfake/</ref> <ref name="kdnuggets">https://www.kdnuggets.com/2018/03/exploring-deepfakes.html/2</ref>

The other way is to find imperfections in the generated content. The technology that generates deepfakes works well, but it is not always perfect, so teaching AI to find these imperfections could also solve the problem.

As of 2021, there are still ways to detect some deepfakes, but the technology is progressing so fast that it may become impossible to tell whether content was generated by AI. <ref name="technology">https://journalistsresource.org/politics-and-government/deepfake-technology-5-resources/</ref>

<p></p>

This technology is inspiring and drives progress, but it also has a downside. Its social impact is already large, and we can only guess how good the algorithms will be a few years from now. The main challenge the Internet is going to face is verifying and tracing all the content that is uploaded. It will most likely become impossible to detect a deepfake video in the future, but that does not mean the Internet will be flooded with fake content. New privacy regulations are likely, and posting content online will probably become much harder, since it will need to be legally verified. We can hope that the technology will be used only in legally permitted ways, but in the world as it is today we cannot be sure whether it will be used against us.

Latest revision as of 11:29, 2 May 2021


Neural Networks in medical diagnosis and applications

Introduction and architecture of Neural Networks

ANNs have been shown to be effective at detecting both patterns and trends, so they can be applied successfully to forecasting and prediction. ANNs are capable of mapping an input data pattern to a corresponding output structure. They can also recall an entire structure from incomplete or faulty patterns. Feed-forward ANNs are less complex than feedback networks: their signals travel in one direction only, from input to output. Feedback ANNs are more powerful and dynamic. They include feedback loops in the signal path, so signals travel in both directions between input and output, which makes them considerably more complicated but also more capable. [9] Because ANNs have proven successful at data pattern recognition, they can be applied in various industries that require forecasting or prediction.

Training process and database for medical diagnosis

One of the core principles of neural networks is a training process based on a corresponding database. For an ANN to learn medical diagnosis, a sufficient dataset is required, in the form of a table or matrix containing analyses of patients whose diagnosed condition is known. Medical data, including symptoms and laboratory results, is used as input for the artificial neural network. Training continues until at least a minimum amount of data has been processed as a base. The procedure is followed by additional checks and verification. The patient examples used for learning should be reliable, so that the ANN generalizes more precisely in later predictions. The dataset used for final verification should differ from the dataset used for training; otherwise the verification results should not be considered valid. Finally, ANN evaluations should first be tested in medical practice by a clinician and, when a prediction proves successful, are added to the learning database of the ANN. [10]
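The requirement that verification data differ from training data can be sketched as a simple hold-out split. The records below are hypothetical placeholders (a patient identifier, two made-up symptom values and a known diagnosis), not real medical data, and the 20% verification fraction is an assumption chosen for illustration.

```python
import random

def split_records(records, verification_fraction=0.2, seed=0):
    """Shuffle the records and hold out a fraction for final verification."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_verify = max(1, int(len(shuffled) * verification_fraction))
    # The first n_verify records are never used for training.
    return shuffled[n_verify:], shuffled[:n_verify]

# Hypothetical records: (patient_id, [symptom values], known diagnosis)
records = [(pid, [pid % 3, pid % 5], pid % 2) for pid in range(20)]

training, verification = split_records(records)
training_ids = {r[0] for r in training}
verification_ids = {r[0] for r in verification}
print(len(training), len(verification))
```

Keeping the two sets disjoint is what makes the verification result meaningful: a network evaluated on the examples it was trained on would only demonstrate memorization.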

ANN application in diabetes and cancer diagnosis

An ANN-based diagnostic system for diabetes detection was first introduced in 2011. Medical data of 420 patients, including rate of change of heart rate and physiological parameters, was used as a base both for training and for verification of the final evaluation. Additionally, ANN can be applied to keep the blood glucose and insulin of diabetic patients at an optimal level, following the trajectory typical of healthy individuals. A fuzzy logic algorithm is implemented within this control system.

The use of ANN for identifying different cancer types, or for predicting the possibility of their further development from patients’ input data, was suggested back in the late 1990s. Being an effective tool for pattern recognition, ANN is suitable for both classification and clustering. [11] Supervised ANN models are applied to the classification of gene expression, while unsupervised models can be used to distinguish patterns in a set of unlabelled data. Supervised learning requires a teacher to be involved in order to minimize the error rate, and training is a very time-consuming process. According to research, most ANN make it possible to diagnose various types of cancer at an early stage and with proven accuracy; the drawback of the technology remains its extensive training time. [10] The documented accuracy of neural network predictions of tumour recurrence is 960 correct out of 1008 cases (about 95%). A probable recurrence can be forecast from data such as tumour size, hormone receptor status and the number of palpable lymphatic nodules. In addition to the identification of tumours, research is under way to apply ANN to the diagnosis of recently emerged diseases such as swine flu, brain fever and chikungunya.

Instant Physicians and Electronic Noses

The Instant Physician application was under development back in the 1980s. It was designed to determine the most probable diagnosis, together with the best available treatment, from an analysis of a set of symptoms given as initial input. Electronic Noses are meant to be used in telepresence surgery. Identifying a particular smell during an operation can be crucial for a surgeon working remotely. An Electronic Nose can distinguish odours, which are then transmitted electronically from the surgical environment and regenerated for the remote surgeon. Additionally, these devices are used in research and development laboratories. [9]

Neural Networks application in forecasting natural hazards

ANN application in prediction and management of natural disasters

Disaster prediction based on an ANN model can be a crucial part of the pre-disaster management phase for disasters of climatic origin, including flooding and drought. As disastrous flooding can be triggered by various factors such as rainfall, snowmelt, high tidal waves or the failure of river barriers, a sufficient dataset on flood-prone areas is required for successful forecasts. Data from base sensors, including temperature, humidity, rainfall, wind speed and underground water level, is used as input. The ANN is trained beforehand on a selected training database consisting of flooding occurrences and non-occurrences. It then forecasts the probability of flooding occurring or not occurring by processing the climatic data of the examined area as input. The reported test evaluation results are statistically precise. [13]

Prediction of natural hazards with Neural Networks

Hazard zone maps are one of the cornerstones of preventive measures against natural hazards. According to their colour coding, vulnerable habitable areas are marked in yellow and highly vulnerable areas in red. This model was first introduced in 1975 in Austria. Creating hazard zone maps is complicated and expensive, so there are many areas and regions for which detailed information is not available. Artificial Neural Networks can learn from existing hazard zone maps and then generate maps for other areas based on the knowledge gained. One drawback of this process is the amount of training data needed for a successful training outcome. Learning can be optimized by applying an unsupervised learning model, which allows smaller sets of training data while maintaining the required quality of results. [12]

Currently, most scientific studies of natural disasters focus on a single hazard at a time. This approach does not take into account the relationships and co-dependency of multiple hazards and might therefore lead to miscalculation of possible risks. For this reason ANN, being a powerful tool for the analysis of large datasets, is suitable for approaches that observe multi-hazard relationships, use documented information about interactions between several extreme natural events and consider both the individual and the collective risks triggered by several hazards. [14]

Prediction of seismic hazards with the use of ANN

Earthquake events depend on multiple variables that must be considered for efficient forecasting. Ground motion data is among the key input values for further analysis. One recent study applying machine learning to earthquake prediction was conducted in Chile and used an immense database of 86 thousand records of seismic events that took place in Chile between 2000 and 2017. The results of the experiment are considered satisfactory for the successful prediction of future seismic events. [15]

Neural Networks and Self-Driving Cars

The last decade witnessed increasingly rapid progress in self-driving vehicle technology, mainly backed by advances in deep learning and artificial intelligence. AI-based self-driving architectures, convolutional and recurrent neural networks, the deep reinforcement learning paradigm and motion algorithms form only the logical part of the self-driving car. These methodologies shape how the computer perceives the driving environment, plans the route and behaves on the road.

Self-Driving Car basics

Data gathered by on-board sources such as LiDARs (Light Detection and Ranging), ultrasonic sensors, GPS and inertial sensors is passed to the computer and used to make driving decisions. Driving decisions are computed either by a modular perception-planning-action pipeline or by End2End learning, in which sensory information is mapped directly to control outputs. The modular pipeline can consist of AI and deep learning methods or of classical non-learning methods; various combinations of learning and non-learning methods are also used. The modular pipeline can be divided into four components that use AI and deep learning and/or classical methods:

1. Perception and Localization

2. High-Level Path Planning

3. Behavior Arbitration, or low-level path planning

4. Motion Controllers

These four components provide relevant data for the autonomous driving system. When the route is entered, the system's first task is to determine its current location and direction and, overall, to “read” its surroundings. The second step, once the current position relative to the surroundings has been determined, is to mark the optimal path; the actions taken during the trip are predetermined by the behavior arbitration system. This would work perfectly on paper, but roads are rarely straight open spaces with no traffic, so motion controllers reactively respond, correct the path and change the driving behavior. [16]

Deep Learning Technologies

Convolutional Neural Networks (CNN), Recurrent Neural Networks (RNN) and Deep Reinforcement Learning (DRL) are the most common deep learning technologies used in autonomous vehicles.

Convolutional Neural Networks

The main purpose of Convolutional Neural Networks (CNN) is to process visual information such as images; they act as image feature extractors and general-purpose non-linear function approximators. Convolutional Neural Networks can be compared to the mammalian visual cortex. According to the dual flux theory, the human brain processes visual flow in two fluxes: the ventral flux is in charge of identification and object recognition, while the dorsal flux determines distances and spatial relations between objects. Convolutional Neural Networks imitate the functions of the ventral flux, recognizing objects and their shapes. Shapes and object patterns are learned during the training of the neural network. The image with recognized patterns and objects is then overlaid on the actual camera image as a set of vectors or filters. [16]
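A minimal sketch of the feature extraction step is shown below: a small filter slides over an image and produces a feature map that responds where the pattern occurs. The 4x4 image and the hand-written vertical-edge filter are illustrative assumptions; in a real CNN the filter values are learned during training. As in most deep learning libraries, the operation is implemented as cross-correlation (the filter is not flipped).

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output

# Toy image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written filter that responds to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The non-zero middle column of the feature map marks the position of the edge, which is the kind of low-level shape information later layers combine into object patterns.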

Recurrent Neural Networks

The most effective feature of Recurrent Neural Networks is their ability to process temporal sequence data such as text or video streams. Unlike conventional neural networks, an RNN contains a time-dependent feedback loop in its memory cell. Recurrent Neural Networks are used to obtain a fast response to input data. Their main downside is that they are not well suited to capturing long-term dependencies in sequence data.
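The feedback loop can be sketched with a single recurrent unit whose new state depends on both the current input and its own previous state. The scalar weights below are arbitrary illustrative values, and the tanh update is the common textbook form, assumed here and not taken from the source.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One time step: combine the current input with the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the unit and collect the state at each step."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)  # the state is fed back into the unit
        states.append(h)
    return states

# A single impulse followed by zeros: the state keeps a fading trace of it.
states = run_rnn([1.0, 0.0, 0.0, 0.0])
print(states)
```

With these values the trace of the first input shrinks at every step, which gives an intuition for why a plain recurrent unit has difficulty with long-term dependencies.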

Deep Reinforcement Learning

Deep Reinforcement Learning treats autonomous driving as a Partially Observable Markov Decision Process (POMDP). In a POMDP the autonomous vehicle is provided with the route and with real-time trip data from its on-board sensors; based on this data it must make decisions, and learn from them, in order to finish the trip successfully with no errors or collisions. At the start, the neural network has a set of rules required to reach the destination. Because obstacles such as other cars and traffic lights occur constantly, the neural network has to make decisions and overcome these obstacles during the trip using previously gathered data. [16]

Perception and Localization

A suite of neural networks enables self-driving vehicles to navigate autonomously by receiving environmental data, processing it and acting on the resulting output.

Camera and LiDAR

Deep learning performs well at detecting and recognizing objects in the 2D and 3D views acquired by video cameras and LiDAR. The 3D image is in most cases based on LiDAR sensors, which provide a 3D representation of the surrounding objects. One measure of LiDAR performance is its field of view; a LiDAR usually has a 360° horizontal field of view. To operate at relatively high speeds, an autonomous vehicle requires a range of at least 200 meters. The 3D object detection error is defined by the resolution of the sensor; the most advanced LiDAR is capable of 3 cm accuracy. Because LiDAR uses a laser to determine shapes and distances, it is vulnerable to bad weather conditions. Cameras provide a 2D image and are cost-efficient, but their major downsides are that they lack depth perception and do not work in insufficient illumination. Cameras and LiDAR share the same disadvantage: bad weather restricts their usability. [16]

Path Planning, Behavior

The main function of path planning is to establish a route between the starting point and the end point. With a neural network, path planning also takes into consideration all possible obstacles present in the surroundings. During the trip, Behavior Arbitration chooses a collision-free solution for the vehicle in real time, depending on the circumstances on the road.

Motion Controllers

The Motion Controller is responsible for calculating the longitudinal and lateral steering commands of the vehicle. It utilizes an End2End Control System, which forwards sensory data directly to steering. The End2End Control System is crucial in high-speed situations, where time is a determining factor.

Learning Controllers

Controllers of autonomous systems operate on fixed input data describing mechanical characteristics. Learning Controllers, on the other hand, utilize training information alongside the fixed input, because of unknown sensory factors during usage. In the field of autonomous driving, an End2End Learning Controller is provided with high-dimensional input. This method differs from the traditional processing pipeline, in which objects are detected, a path is then planned, and the computed control data is executed.

Safety Concerns

Because current hardware lacks sufficient computing power, neural networks cannot predict every situation that may arise during usage. The safety of the system depends on several factors:

1. Which learning techniques are implemented

2. Surrounding environmental conditions

3. Weather conditions

4. Sensor errors and accuracy

There is no commonly agreed definition for the term “safety” in terms of machine learning or deep learning. [16]

Neural networks, machine learning and deep learning try to mimic human interactions within a given scenario. The main focus of almost all neural networks is to acquire human-like experience, leaving behind human understanding of the situation. Sometimes dilemmas and problems require a different angle of view, which neural networks can provide.

Neural Networks as a fake synthetic media

History of Deepfake

In the last few years the whole world has witnessed the true power of neural networks in media, where a person’s face in a picture or video is replaced with someone else’s likeness. Given enough content and examples of a task, artificial intelligence is able to replicate and mimic human emotions, thus creating realistic fake content of someone. Methods of creating this content are based on deep learning and generative neural network techniques. [17] The technology was initially developed in the 1990s and later became available for public use online. Deepfakes grabbed people’s attention because of their wide range of uses. They reached peak popularity in 2018, when even the average online enthusiast could generate a deepfake using publicly available apps. [18]

Applications of Deepfake

Social media

Deepfakes are very popular on various social media platforms. Since low- and medium-quality apps are available to average internet users, they are frequently used either for mimicking oneself or just for fun. A fine example is a 2020 meme trend in which people generated videos of characters or real people singing a song from the video game series “Yakuza”. [19]

Movies

If even the average internet user can now try creating a deepfake, imagine the possibilities available to an entire mass media company. The technology is already often used in film production. A great example is the CGI version of the famous “Star Wars” character Leia Organa in recent films. Many predict that AI-generated imagery is the future of film production and could make it cheaper and much faster. [20]

Pornography

A large share of deepfakes consists of pornographic content featuring real people. According to the 2019 deepfake report by Deeptrace, 96% of all deepfake-generated content was pornographic. One major reason for that could be an app, available for Linux and Windows, that was made specifically to “undress” people in photos. The app was later removed, yet its existence still says a lot about our society. [21]

Politics

Deepfakes have already been used many times to fake political propaganda. One of the most recent examples is a misleading speech attributed to the prime minister of Belgium, Sophie Wilmès. The generated video showed Sophie Wilmès making false statements about COVID-19. [22]

Deepfakes generating technology

Autoencoders

Deepfakes are nowadays mainly generated by a type of neural network called an autoencoder. This algorithm performs two tasks. In the first stage, it takes an input image and encodes it into a small set of numerical values. Encoding continues until the bottleneck layer is reached, which contains the target number of variables needed to decode the image. The second stage is the reverse process: decoding the variables from the bottleneck to recreate the original input image. In essence, the autoencoder is a trainable encoder-decoder algorithm. To train it, we need to provide the autoencoder with as many images of one person’s face as possible; for the best result these should include pictures from all angles and in various lighting conditions. Ideally, the algorithm should be fed up to a couple of thousand pictures. The autoencoder will eventually find a way to encode the person’s facial features in a smaller and smaller set of variables, making the decoding process faster and more accurate. [18] [19]
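The encode, bottleneck and decode stages can be illustrated with a deliberately tiny autoencoder. Each "image" here is just two numbers, the bottleneck is a single number, and both stages are linear; the toy data, starting parameters and learning rate are assumptions for illustration. Gradients are estimated by finite differences to keep the sketch short, so this is not how production autoencoders are trained.

```python
def encode(point, params):
    """Compress a 2-value input into one bottleneck value."""
    a, b, _, _ = params
    return a * point[0] + b * point[1]

def decode(code, params):
    """Rebuild the 2-value input from the bottleneck value."""
    _, _, c, d = params
    return (c * code, d * code)

def reconstruction_error(data, params):
    """Mean squared difference between inputs and their reconstructions."""
    total = 0.0
    for point in data:
        rebuilt = decode(encode(point, params), params)
        total += (point[0] - rebuilt[0]) ** 2 + (point[1] - rebuilt[1]) ** 2
    return total / len(data)

def train(data, params, learning_rate=0.1, steps=1000, eps=1e-6):
    """Gradient descent with finite-difference gradients (a simplification)."""
    params = list(params)
    for _ in range(steps):
        base = reconstruction_error(data, params)
        grads = []
        for k in range(len(params)):
            bumped = list(params)
            bumped[k] += eps
            grads.append((reconstruction_error(data, bumped) - base) / eps)
        params = [p - learning_rate * g for p, g in zip(params, grads)]
    return params

# Toy inputs that share one underlying pattern (second value = 2 * first).
data = [(0.1, 0.2), (0.2, 0.4), (0.3, 0.6), (0.4, 0.8), (0.5, 1.0)]
initial = [0.5, 0.5, 0.5, 0.5]  # assumed starting parameters a, b, c, d
trained = train(data, initial)
error_before = reconstruction_error(data, initial)
error_after = reconstruction_error(data, trained)
print(error_before, error_after)
```

Because all inputs follow one pattern, a single bottleneck value is enough to rebuild them, which mirrors how a face autoencoder learns a compact description of one person's features.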

Deepfake autoencoders

Deepfake generation uses two trained autoencoders: one is trained to encode and decode the face of the person imitating the speech or action, and the other is trained to do the same with the face of the victim (for example an actor or a character). Once both autoencoders are properly trained, the decoders are swapped: the encoded face of the imitating person is passed to the victim’s decoder, which produces the desired result, with the victim’s face placed over the face of the imitating person in the video. Generating all of this can take from several hours to multiple days, depending on the amount of input and the complexity of the desired result. [18] [19]

Society issues with Deepfakes

The one big problem with deepfakes is that this technology can easily manipulate us into thinking that the person in a video is real. People sometimes underestimate the power of modern AI technologies and forget that such things are now possible. Political propaganda, identity fraud, misleading speeches and fake pornography are all possible given enough footage of the “victim’s” face. Many platforms have already banned everything associated with deepfake content. It might all seem like fun and games, but using someone’s face in a video without their consent is most likely illegal. People are already losing trust in online content, since there are many unverified sources and the only way to properly confirm a well-made deepfake is to ask the person whose face is used in the video directly. [23]

Detecting the Deepfakes

Both governments and social media companies are worried about the influence of deepfakes on our society and privacy. Many companies, such as Microsoft and Facebook, are designing and launching tools that could help detect deepfakes. [18] One way to detect deepfakes is to train AI to recognize the characteristic behaviour of the person in the video. As humans, we all have our own way of expressing ourselves: gestures, specific facial patterns, the way our body moves. All these features can be used against deepfakes and could help to detect them. The other way is to find imperfections in the generated content. The technology that generates deepfakes works well but is not always perfect, so teaching AI to find these imperfections could also solve the problem. As of 2021 there are still ways to detect some deepfakes, but they are progressing so fast that it could become impossible to tell whether content is generated by AI or not. [24]

Of course, this technology is inspiring and drives progress further, but everything comes with a downside. The social impact is already huge, and we can only guess how good the algorithm will be a few years from now. The main challenge the Internet is going to face is verifying and tracing all the content that is uploaded. Most likely it will become impossible to detect a deepfake video in the future, but that does not mean the Internet will be flooded with fake content. We will have to face new privacy regulations, and it will probably become much harder to post content online, since it will need to be legally verified. We have to hope for the best and use our technology only in legally permitted ways, but in the world we live in right now we cannot be sure whether the technology will be used against us or not.

References

1. http://machinelearning.ru/wiki/index.php?title

2. https://habr.com/ru/post/383753/

3. https://studfile.net/preview/3170620/page:3/

4. https://studfile.net/preview/3170620/page:2/#6

5. https://studfile.net/preview/3170620/page:2/

6. https://studfiles.net/preview/3170620/page:3/#8

7. https://habr.com/post/312450/

8. https://youtu.be/po31nmBzbCY

9. http://www.ijmer.com/papers/vol%201%20issue%201/H011057064.pdf

10. https://www.researchgate.net/publication/250310836_Artificial_neural_networks_in_medical_diagnosis/

11. https://www.sciencedirect.com/science/article/pii/S1877050915023613/

12. https://arxiv.org/pdf/1802.07257.pdf

13. https://www.researchgate.net/publication/4185987_A_neural_network_based_prediction_model_for_flood_in_a_disaster_management_system_with_sensor_networks/

14. https://www.nature.com/articles/s41598-020-69233-2/

15. https://www.intechopen.com/books/natural-hazards-risk-exposure-response-and-resilience/assessing-seismic-hazard-in-chile-using-deep-neural-networks/

16. https://arxiv.org/pdf/1910.07738.pdf

17. https://www.sciencedirect.com/science/article/abs/pii/S0007681319301600?via%3Dihub/

18. https://bdtechtalks.com/2020/09/04/what-is-deepfake/

19. https://www.kdnuggets.com/2018/03/exploring-deepfakes.html/2

20. https://www.kdnuggets.com/2018/03/exploring-deepfakes.html

21. https://regmedia.co.uk/2019/10/08/deepfake_report.pdf

22. https://www.brusselstimes.com/news/belgium-all-news/politics/106320/xr-belgium-posts-deepfake-of-belgian-premier-linking-covid-19-with-climate-crisis/

23. https://www.alanzucconi.com/2018/03/14/the-ethics-of-deepfakes/

24. https://journalistsresource.org/politics-and-government/deepfake-technology-5-resources/