Onions can make people cry

Intro

This wiki covers the most important aspects of Tor, the free and open-source software that enables anyone to communicate anonymously on the internet. It looks briefly at the deep, dark and surface web, at what Tor is for and why people use it, at the history of Tor, at how Tor actually works, and at the legal and illegal aspects of the web browser.

The three webs

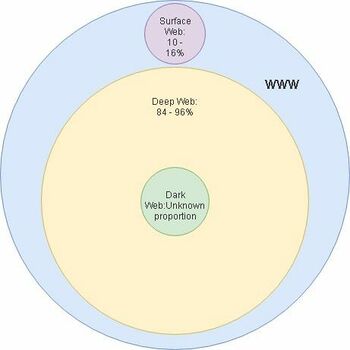

The World Wide Web (WWW) is commonly divided into three parts: the surface web, the deep web and the dark web.

Surface web

The surface web is the easiest to explain, as it is the part of the web that all of us use every day. Even right now, as you are reading this wiki, you are browsing the surface web. The different areas of the web are defined by whether their pages are indexed and whether users need to authenticate themselves to access them. The surface web mostly consists of web pages that do not need user authentication. These pages are indexed, meaning that a user can find them with a search engine. The surface web contains about 10-16% of all the information on the WWW. (9)

Deep web

Everything except the surface web makes up the deep web. This means that the dark web is also a part of the deep web. Most of the deep web is inaccessible to normal search engines, since its pages are not indexed or require authentication. Much of it, such as a webmail inbox behind a login, can still be opened in an ordinary browser by anyone who has the URL and the credentials; a special browser like Tor is only needed for the dark web part. Users there also rely on directories such as the Hidden Wiki to get the URLs of the different webpages, so the Hidden Wiki is a kind of gateway into that part of the deep web. The deep web contains about 84-90% of all the information on the WWW. (9)

Dark web

And now, finally, the dark web. The dark web is a part of the deep web, and its full size is not actually known. Security experts estimate that there are about 10,000 to 100,000 active sites on the dark web at any given moment. Since the dark web is a part of the deep web, its pages are not indexed and cannot be found by search engines. The dark web is also the part of the web where most of the illegal trade in drugs and guns and most human trafficking happens, but governments and the UN have also used the dark web to protect political dissidents and hunt down criminals. A great example of this is how the US government tracked down Ross Ulbricht, the owner of Silk Road (an underground black market primarily for drugs), in 2013. (9)

Why use Tor

So, after all of that, why would you use The Onion Router (Tor)? Using Tor is similar to using any other web browser, except that your real IP address and other system information are obscured while you browse the web. It also hides the user’s activity from their internet service provider.

The primary uses of Tor are:

- Bypassing censorship and surveillance

- Visiting websites anonymously

- Accessing Tor hidden services (.onion sites)

We will get into more detail about Tor and how it works later on. (9)

History

The 1990s

Now that we have the basics covered, let’s look at an overview of the history of The Onion Router (Tor). The idea of onion routing began in the mid-1990s, and the developers of the project believed that internet users should have private access to an uncensored web. In the 1990s the lack of security on the internet, and its ability to be used for tracking and surveillance, was becoming clear. Therefore three US Naval Research Lab (NRL) employees, Paul Syverson, David Goldschlag and Mike Reed, created and deployed the first research designs and prototypes of onion routing. From its inception, onion routing relied on a decentralized network whose nodes were operated by different entities with diverse interests and trust assumptions. The software also needed to be free and open-source to maximize transparency and decentralization. (8)

The 2000s

In the early 2000s, Roger Dingledine, an MIT graduate, started working on an NRL onion routing project with Paul Syverson. To distinguish this project from the other onion routing efforts that already existed, they called it Tor, short for The Onion Router. The Electronic Frontier Foundation (EFF) recognized the benefit of Tor for digital rights and began funding the project in 2004, and in 2006 the Tor Project was founded to maintain Tor’s development. In 2007, the organization began developing bridges to the Tor network to address censorship, such as the need to get around government firewalls, so that its users could access the open web. Tor was mostly popular with tech-savvy people and hard for less technically knowledgeable people to use, so development of tools beyond the Tor proxy began in 2005 and development of the Tor browser began in 2008. (8)

The 2010s

With the Tor browser making Tor more accessible to everyday internet users, Tor became an important tool during the Arab Spring, which began in late 2010. It not only protected people’s identity online but also helped them access critical resources, social media and other websites that were blocked at the time. The 2013 Snowden revelations made tools against mass surveillance a mainstream concern. Tor was used in Snowden’s whistleblowing, and the leaked documents also indicated that, at that time, Tor could not be cracked. (8)

The onion router - A closer look

In order to give a clearer picture of what Tor is and how it works, this part will cover what traffic analysis is, how the onion router operates, problems related to anonymity when using Tor and what onion services are.

Traffic analysis: the art of tracking users

In order to understand Tor and how it works, the term traffic analysis and its working principles must be understood first.

Traffic analysis is a form of Internet surveillance used to monitor and inspect network traffic travelling from one end device to another over a public network. This technique lets people and services listen on a network to discover the source and destination of a user’s traffic, which makes it possible to track personal interests and behavior even when the connection is encrypted. This is possible because of the structure of Internet data packets. Each packet has a data payload, which contains the content being sent over the network, such as an email or an image, and a header that is used for routing. Of these two parts, only the data payload can be encrypted. Since traffic analysis focuses on the header rather than on the content of the message, it can still disclose key information about the user, such as source and destination, size of the payload, and timing. This enables unauthorized parties as well as authorized intermediaries (e.g. Internet Service Providers) to learn about a user not from the content of the data itself but from the Internet headers. Even very simple forms of traffic analysis may allow a middleman to sit between the sender and the receiver and inspect the travelling data. (1)
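
To make the header/payload split concrete, here is a minimal Python sketch of what an observer can read from a packet without decrypting anything. It assumes a plain 20-byte IPv4 header with no options. The helper names `build_ipv4_packet` and `observe`, the documentation-range IP addresses and the filler payload are all made up for illustration, and the checksum is left at zero, so this is not a packet that a real network stack would accept.

```python
import socket
import struct

# Layout of a 20-byte IPv4 header without options (network byte order).
IPV4_HEADER = "!BBHHHBBH4s4s"
HEADER_LEN = struct.calcsize(IPV4_HEADER)  # 20 bytes


def build_ipv4_packet(src: str, dst: str, payload: bytes) -> bytes:
    """Build a toy packet: readable header + opaque payload (hypothetical helper)."""
    version_ihl = (4 << 4) | 5            # IPv4, header length of 5 * 4 bytes
    total_length = HEADER_LEN + len(payload)
    header = struct.pack(
        IPV4_HEADER,
        version_ihl, 0, total_length,     # version/IHL, DSCP/ECN, total length
        0, 0,                             # identification, flags/fragment offset
        64, 6, 0,                         # TTL, protocol (6 = TCP), checksum (unset)
        socket.inet_aton(src),
        socket.inet_aton(dst),
    )
    return header + payload


def observe(packet: bytes) -> dict:
    """Return what a middleman learns from the header alone."""
    fields = struct.unpack(IPV4_HEADER, packet[:HEADER_LEN])
    return {
        "source": socket.inet_ntoa(fields[8]),
        "destination": socket.inet_ntoa(fields[9]),
        "payload_size": fields[2] - HEADER_LEN,
    }


if __name__ == "__main__":
    # The payload stands in for ciphertext; the observer never looks at it.
    packet = build_ipv4_packet("192.0.2.10", "198.51.100.7", b"\x8f" * 48)
    print(observe(packet))
```

Even though the payload is never read, the observer still learns who is talking to whom and how much data was sent. Adding a timestamp per packet would give the timing information mentioned above.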

Tor's working principles

The main purpose of Tor is to protect its users against traffic analysis. To do so, the onion router distributes the user’s transactions over several nodes on the Internet, called relays, so that no single point on the path can link the user to the destination. Instead of taking the direct route from source to destination, data packets travel through several randomly chosen relays, so an observer at any one relay cannot tell for certain where the data came from or where it is going. The circuit that the data takes is built incrementally, one hop at a time. The user’s software encrypts each connection between relays on the network, and each relay knows only the node that hands it data and the node it hands data to. To ensure that no hop can trace the connections before or after it, a separate set of encryption keys is negotiated for each hop. As a result, neither an eavesdropper nor a compromised relay can use traffic analysis to link the user’s source and destination. Tor only works with TCP streams, and for efficiency it reuses the same circuit for connections made within roughly the same ten minutes. Later requests are assigned a new circuit. (1)
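
The idea of one encryption layer per hop can be sketched in a few lines of Python. This is a toy model only: the XOR keystream built from SHA-256 is an assumption chosen so the example runs with the standard library, it is not the cryptography Tor uses, and the function names and the three fixed keys are hypothetical. In Tor the per-hop keys are negotiated while the circuit is built rather than hard-coded.

```python
import hashlib


def keystream(key: bytes, length: int) -> bytes:
    """Derive `length` pseudo-random bytes from `key` (toy construction)."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:length]


def apply_layer(key: bytes, data: bytes) -> bytes:
    """Add or remove one layer; XOR with the same keystream undoes itself."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))


def wrap(message: bytes, hop_keys: list) -> bytes:
    """Client side: add one layer per hop, innermost layer for the last hop."""
    cell = message
    for key in reversed(hop_keys):
        cell = apply_layer(key, cell)
    return cell


if __name__ == "__main__":
    # Hypothetical keys, one shared between the client and each relay.
    keys = [b"entry-key", b"middle-key", b"exit-key"]
    cell = wrap(b"hello over three hops", keys)
    for name, key in zip(["entry", "middle", "exit"], keys):
        cell = apply_layer(key, cell)   # each relay removes only its own layer
        print(name, cell)
```

Only after the last relay has removed its layer does the original message appear. The first two relays see data that is still wrapped in layers they hold no key for.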

Tor’s working principles can be summarized in three steps:

- The user’s Tor client obtains a list of relays from a directory server. (

- The user’s Tor client selects a random path from source to destination, where the path is chosen one hop at a time. (4)

- If the user later visits a different site, the user’s Tor client picks a second random path. (5)
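
The three steps can be sketched as a small Python class. This is a simplified illustration rather than Tor’s actual path selection: the class name `CircuitManager`, the relay names, the circuit length of three hops and the uniform random choice are all assumptions, and the 600-second lifetime simply encodes the "roughly ten minutes" mentioned earlier.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

CIRCUIT_LIFETIME_SECONDS = 600  # assumed: "roughly the same ten minutes"


@dataclass
class CircuitManager:
    relays: List[str]            # step 1: list obtained from a directory server
    rng: random.Random
    hops: int = 3                # assumed circuit length
    circuit: Optional[List[str]] = None
    built_at: float = 0.0

    def circuit_for(self, now: float) -> List[str]:
        """Return the current circuit, building a fresh one once it has expired."""
        expired = (
            self.circuit is None
            or now - self.built_at >= CIRCUIT_LIFETIME_SECONDS
        )
        if expired:
            # Steps 2 and 3: pick a new random path of distinct relays.
            self.circuit = self.rng.sample(self.relays, self.hops)
            self.built_at = now
        return self.circuit


if __name__ == "__main__":
    directory = ["relay-a", "relay-b", "relay-c", "relay-d", "relay-e"]
    manager = CircuitManager(relays=directory, rng=random.Random())
    print(manager.circuit_for(now=0.0))    # first request builds a circuit
    print(manager.circuit_for(now=120.0))  # reused within the lifetime
    print(manager.circuit_for(now=900.0))  # later request gets a new circuit
```

Passing the clock value in as `now` keeps the sketch deterministic and easy to test. A real client would read the system clock and would also take relay properties into account when choosing a path.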

The onion services

Tor is used not just to browse the Internet anonymously, but also to access “special” services that together make up the dark web. These services are called onion services and can only be accessed over Tor. (7)

Onion services offer several advantages that ordinary services may lack, such as end-to-end encryption, end-to-end authentication, location hiding, and NAT punching (there is no need to open ports, because onion services punch through NAT). To achieve all this, Tor uses a special protocol called the Onion Service Protocol. Instead of being reached through a 32-bit or 128-bit IP address (depending on the IP version), an onion service is identified by its public identity key followed by the .onion suffix. With the help of the Tor network, the Onion Service Protocol lets a client first introduce itself to the onion service and then agree with it on a meeting point, where the client accesses the service. (7)
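
As an illustration of how an address can be derived from the identity key, the sketch below follows the version 3 onion address encoding as we understand it: the 32-byte public key, a 2-byte checksum and a version byte are base32-encoded and followed by `.onion`. The function name is ours, and the key in the usage example is a placeholder byte string, not a real service key.

```python
import base64
import hashlib


def onion_v3_address(public_key: bytes) -> str:
    """Encode a 32-byte identity public key as a v3-style .onion address."""
    if len(public_key) != 32:
        raise ValueError("expected a 32-byte public key")
    version = b"\x03"
    # The checksum ties the key and version together so typos are detectable.
    checksum = hashlib.sha3_256(
        b".onion checksum" + public_key + version
    ).digest()[:2]
    encoded = base64.b32encode(public_key + checksum + version)
    return encoded.decode("ascii").lower() + ".onion"


if __name__ == "__main__":
    placeholder_key = bytes(range(32))  # stand-in bytes, not a real key
    print(onion_v3_address(placeholder_key))
```

The result is 56 characters plus the `.onion` suffix. Because the address carries the key itself, a client that knows the address can check that it is talking to the holder of that key, which is where the end-to-end authentication mentioned above comes from.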