Exam help

Computer hardware

Different buses and their uses

In computer architecture, a bus is a communication system that transfers data between components inside a computer, or between computers. This expression covers all related hardware components (wire, optical fiber, etc.) and software, including communication protocols.

The internal bus, also known as internal data bus, memory bus, system bus or Front-Side-Bus, connects all the internal components of a computer, such as CPU and memory, to the motherboard. Internal data buses are also referred to as a local bus, because they are intended to connect to local devices. This bus is typically rather quick and is independent of the rest of the computer operations.

The external bus, or expansion bus, is made up of the electronic pathways that connect external devices, such as printers, to the computer.

Types and uses:

- USB

- Universal Serial Bus. Designed for: input devices, digital cameras, printers, media players...

- Serial ATA

- Used by internal storage devices (hard disk). They replace the old ATA connectors.

- PCI

- Peripheral Component Interconnect, is a local computer bus for attaching hardware devices in a computer. Attached devices can take either the form of an integrated circuit fitted onto the motherboard itself or an expansion card that fits into a slot. Typical PCI cards used in PCs include: network cards, sound cards, modems, extra ports such as USB or serial, TV tuner cards and disk controllers.

- PCI Express

- Peripheral Component Interconnect Express (also called PCIe), is a high-speed serial computer expansion bus standard designed to replace the older PCI, PCI-X, and AGP bus standards. PCIe has numerous improvements over the older standards, including higher maximum system bus throughput, lower I/O pin count and smaller physical footprint, better performance scaling for bus devices, a more detailed error detection and reporting mechanism, and native hot-plug functionality. More recent revisions of the PCIe standard provide hardware support for I/O virtualization.

- Mini PCIe

- It is based on PCI Express technology. Its small size and wide variety of connectors make it suitable for USB 2.0 cards, SIM cards, Wi-Fi and Bluetooth cards, and 3G and GPS cards.

- ExpressCard

- It is an interface to connect peripheral devices to a computer, usually a laptop computer. ExpressCards can connect a variety of devices to a computer including mobile broadband modems, IEEE 1394 (FireWire) connectors, USB connectors, Ethernet network ports, Serial ATA storage devices, solid-state drives, external enclosures for desktop-size PCI Express graphics cards and other peripheral devices, wireless network interface controllers (NIC), TV tuner cards, Common Access Card (CAC) readers, and sound cards.

What are the differences between hard disk drive (HDD) and solid state drive (SSD)?

The traditional spinning hard drive (HDD) is the basic nonvolatile storage on a computer. Hard drives are essentially metal platters with a magnetic coating which stores the data. A read/write head on an arm accesses the data while the platters are spinning in a hard drive enclosure. An SSD does the same job as an HDD, but instead of a magnetic coating on top of platters, the data is stored on interconnected flash memory chips that retain the data even when there's no power present. In short, HDDs have spinning platters with a magnetic coating, while SSDs have no moving parts and use flash memory instead.

| Attribute | SSD (Solid State Drive) | HDD (Hard Disk Drive) |
|---|---|---|
| Power Draw / Battery Life | Less power draw, averages 2 – 3 watts, resulting in 30+ minute battery boost | More power draw, averages 6 – 7 watts and therefore uses more battery |
| Cost | Expensive, roughly $0.10 per gigabyte (based on buying a 1TB drive) | Only around $0.06 per gigabyte, very cheap (buying a 4TB model) |
| Capacity | Typically not larger than 1TB for notebook size drives; 1TB max for desktops | Typically around 500GB and 2TB maximum for notebook size drives; 6TB max for desktops |
| Operating System Boot Time | Around 10-13 seconds average bootup time | Around 30-40 seconds average bootup time |
| Noise | There are no moving parts and as such no sound | Audible clicks and spinning can be heard |
| Vibration | No vibration as there are no moving parts | The spinning of the platters can sometimes result in vibration |
| Heat Produced | Lower power draw and no moving parts so little heat is produced | HDD doesn’t produce much heat, but it will have a measurable amount more heat than an SSD due to moving parts and higher power draw |
| Failure Rate | Mean time between failures of 2.0 million hours | Mean time between failures of 1.5 million hours |
| File Copy / Write Speed | Generally above 200 MB/s and up to 550 MB/s for cutting edge drives | The range can be anywhere from 50 – 120 MB/s |
| Encryption | Full Disk Encryption (FDE) supported on some models | Full Disk Encryption (FDE) supported on some models |
| File Opening Speed | Up to 30% faster than HDD | Slower than SSD |
| Magnetism Affected? | An SSD is safe from any effects of magnetism | Magnets can erase data |

What is the purpose of Flash Translation Layer in terms of solid state drives?

Flash memory has a limited lifespan, measured in write cycles (more precisely, program-erase (PE) cycles). The FTL is used, among other things, to spread that wear evenly across the drive.

Although presenting an array of Logical Block Addresses (LBA) makes sense for HDDs as their sectors can be overwritten, it is not fully suited to the way flash memory works. For this reason, an additional component is required to hide the inner characteristics of NAND flash memory and expose only an array of LBAs to the host. This component is called the Flash Translation Layer (FTL), and resides in the SSD controller. The FTL is critical and has three main purposes: logical block mapping, wear leveling and garbage collection.

The logical block mapping translates logical block addresses (LBAs) from the host space into physical block addresses (PBAs) in the physical NAND-flash memory space. This mapping takes the form of a table, which for any LBA gives the corresponding PBA.

Wear leveling (also written as wear levelling) is a technique for prolonging the service life of some kinds of erasable computer storage media, such as flash memory (used in solid-state drives (SSDs) and USB flash drives).
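
A minimal sketch of the idea, assuming the controller keeps an erase counter per block. The names `pick_block`, `erase_counts` and `free_blocks` are hypothetical and not taken from any real firmware. New writes are steered to the free block that has been erased the fewest times, so wear spreads evenly:

```python
def pick_block(erase_counts, free_blocks):
    """Return the free block with the fewest program-erase cycles so far."""
    return min(free_blocks, key=lambda block: erase_counts[block])


# Hypothetical state: erase count per physical block, and which blocks are free.
erase_counts = {0: 120, 1: 15, 2: 15, 3: 300}
free_blocks = [0, 2, 3]

chosen = pick_block(erase_counts, free_blocks)
erase_counts[chosen] += 1  # the block is erased once more before it is reused
```

Real controllers also move rarely changed data around (static wear leveling); this sketch covers only the choice of the next block to write.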

The garbage collection process in the SSD controller ensures that “stale” pages are erased and restored into a “free” state so that the incoming write commands can be processed. If the data in a page has to be updated, the new version is written to a free page, and the page containing the previous version is marked as stale. When blocks contain stale pages, they need to be erased before they can be written to.
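
The three FTL tasks can be tied together in a toy model. This is a sketch under strong simplifying assumptions, not how a real controller is written: the class `TinyFTL` is hypothetical, and it erases stale pages one by one, whereas real NAND flash erases whole blocks and must first copy any valid pages elsewhere.

```python
class TinyFTL:
    """Toy flash translation layer: pages are never overwritten in place."""

    def __init__(self, num_pages):
        self.flash = [None] * num_pages      # physical pages
        self.state = ["free"] * num_pages    # "free", "valid" or "stale"
        self.mapping = {}                    # LBA -> physical page address

    def write(self, lba, data):
        old = self.mapping.get(lba)
        if old is not None:
            self.state[old] = "stale"        # previous version is now outdated
        if "free" not in self.state:
            self.garbage_collect()
        pba = self.state.index("free")       # ValueError if the drive is full
        self.flash[pba] = data
        self.state[pba] = "valid"
        self.mapping[lba] = pba

    def read(self, lba):
        return self.flash[self.mapping[lba]]

    def garbage_collect(self):
        """Erase stale pages so they can accept new writes."""
        for pba, page_state in enumerate(self.state):
            if page_state == "stale":
                self.flash[pba] = None
                self.state[pba] = "free"


ftl = TinyFTL(num_pages=3)
ftl.write(0, "a")
ftl.write(1, "b")
ftl.write(0, "a2")   # goes to a new page; the old copy becomes stale
ftl.write(1, "b2")   # no free page left, so garbage collection runs first
```

The host only ever sees LBAs 0 and 1; where the data physically lives changes with every update.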

What are the differences between volatile/non-volatile, RAM, ROM, EEPROM and where are they used?

Volatile = does not hold data after power off. Non-volatile = holds data even after power off.

Random Access Memory or RAM is a form of data storage that can be accessed randomly at any time, in any order and from any physical location, allowing quick access and manipulation. RAM allows the computer to read data quickly to run applications. It allows reading and writing. It is volatile.

Read-only memory or ROM is a form of data storage that cannot be easily altered or reprogrammed. Its contents are hardwired and are kept while the computer is switched off. ROM stores the program required to initially boot the computer. It only allows reading. It is non-volatile.

EEPROM (electrically erasable programmable read-only memory) is user-modifiable read-only memory (ROM) that can be erased and reprogrammed. Unlike EPROM chips, EEPROMs do not need to be removed from the computer to be modified. However, an EEPROM chip has to be erased and reprogrammed in its entirety, not selectively. It also has a limited life - that is, the number of times it can be reprogrammed is limited to tens or hundreds of thousands of times. BIOS of PCs are usually written to EEPROM.

What is data retention?

It is how long a device can hold data before it becomes unreadable. SSD specifications show a data retention of at least one year for personal devices.

Data retention is also the time a company must (or can) retain information/logs about its users/customers/suppliers by law.

What are the differences between asynchronous/synchronous, dynamic/static RAM and where are they used?

The two main forms of modern RAM are static RAM (SRAM) and dynamic RAM (DRAM). In SRAM, a bit of data is stored using the state of a six transistor memory cell. This form of RAM is more expensive to produce, but is generally faster and requires less power than DRAM and, in modern computers, is often used as cache memory for the CPU. DRAM stores a bit of data using a transistor and capacitor pair, which together comprise a DRAM memory cell. The capacitor holds a high or low charge (1 or 0, respectively), and the transistor acts as a switch that lets the control circuitry on the chip read the capacitor's state of charge or change it. As this form of memory is less expensive to produce than static RAM, it is the predominant form of computer memory used in modern computers. Dynamic RAM is used to build the large main memory of a system, whereas static RAM is used for speed-sensitive caches.

Asynchronous refers to the fact that the memory is not synchronized to the system clock. A memory access is begun, and a certain period of time later the memory value appears on the bus. The signals are not coordinated with the system clock at all. Asynchronous memory works fine in lower-speed memory bus systems but is not nearly as suitable for use in high-speed (>66 MHz) memory systems. A newer type of DRAM, called "synchronous DRAM" or "SDRAM", is synchronized to the system clock; all signals are tied to the clock so timing is much tighter and better controlled. This type of memory is much faster than asynchronous DRAM and can be used to improve the performance of the system. It is more suitable to the higher-speed memory systems of the newest PCs.

What is cache? What is cache coherence?

Cache memory is used to reduce the average memory access time. It does this by keeping copies of the data from frequently accessed main memory addresses, so the CPU can reach that data faster, because cache memory can be read much faster than main memory.
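
The effect on the average access time can be illustrated with a small simulation. This is only a sketch: the function `average_access_time`, the least-recently-used replacement policy, and the latencies of 1 ns for a hit and 100 ns for a miss are assumptions made for the example, not figures from these notes.

```python
from collections import OrderedDict


def average_access_time(addresses, cache_size, hit_ns=1, miss_ns=100):
    """Simulate a tiny LRU cache and return the mean access time in ns."""
    cache = OrderedDict()
    hits = 0
    for addr in addresses:
        if addr in cache:
            hits += 1
            cache.move_to_end(addr)          # mark as most recently used
        else:
            cache[addr] = True               # fetch from main memory
            if len(cache) > cache_size:
                cache.popitem(last=False)    # evict the least recently used
    misses = len(addresses) - hits
    return (hits * hit_ns + misses * miss_ns) / len(addresses)


# A program that keeps touching the same two addresses.
trace = [1, 2, 1, 2, 1, 2, 1, 2]
with_cache = average_access_time(trace, cache_size=2)     # 2 misses, 6 hits
too_small = average_access_time(trace, cache_size=1)      # every access misses
```

With room for both addresses only the first two accesses go to main memory; with room for one, each access evicts the other address and the cache gives no benefit.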

Cache coherence is the consistency of shared resource data that ends up stored in multiple local caches. When clients in a system maintain caches of a common memory resource, problems may arise with inconsistent data, which is particularly the case with CPUs in a multiprocessing system.

What are differences between resistive and capacitive touchscreen?

A resistive touchscreen needs pressure to work: pressing brings two sheets of plastic into contact, which lets electricity flow between them. A capacitive touchscreen works by conducting electricity from the user's finger.

Resistive touchscreens rely on the pressure of your fingertip—or any other object—to register input. They consist of two flexible layers with an air gap in-between. In order for the touchscreen to register input, you must press on the top layer using a small amount of pressure, in order to depress the top layer enough to make contact with the bottom layer. The touchscreen will then register the precise location of the touch. You can use anything you want on a resistive touchscreen to make the touch interface work; a gloved finger, a wooden rod, a fingernail – anything that creates enough pressure on the point of impact will activate the mechanism and the touch will be registered.

Capacitive touchscreens instead sense conductivity to register input—usually from the skin on your fingertip. Because you don’t need to apply pressure, capacitive touchscreens are more responsive than resistive touchscreens. However, because they work by sensing conductivity, capacitive touchscreens can only be used with objects that have conductive properties, which includes your fingertip (which is most ideal), and special styluses designed with a conductive tip. This is the reason you cannot use a capacitive screen while wearing gloves – the gloves are not conductive, and the touch does not cause any change in the electrostatic field.

Explain how computer mouse works?

An optical computer mouse uses a light source, typically an LED, and a light detector, such as an array of photodiodes, to detect movement relative to a surface. Modern surface-independent optical mice work by using an optoelectronic sensor (essentially, a tiny low-resolution video camera) to take successive images of the surface on which the mouse operates. The technology underlying the modern optical computer mouse is known as digital image correlation. To understand how optical mice work, imagine two photographs of the same object except slightly offset from each other. Place both photographs on a light table to make them transparent, and slide one across the other until their images line up. The amount that the edges of one photograph overhang the other represents the offset between the images, and in the case of an optical computer mouse the distance it has moved. Optical mice capture one thousand successive images or more per second. Depending on how fast the mouse is moving, each image will be offset from the previous one by a fraction of a pixel or as many as several pixels. Optical mice mathematically process these images using cross correlation to calculate how much each successive image is offset from the previous one.
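
The "slide one photograph over the other" idea can be sketched as a brute-force cross-correlation. This is an illustration only: `estimate_offset` is a hypothetical name, the frames are tiny lists of brightness values, only whole-pixel shifts are tried, and real sensors do this in dedicated hardware with sub-pixel precision.

```python
def estimate_offset(prev, curr, max_shift=2):
    """Return (dx, dy) such that curr[y + dy][x + dx] best matches prev[y][x]."""
    height, width = len(prev), len(prev[0])
    best_score, best_shift = None, (0, 0)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            # Cross-correlation score: sum of products over the overlapping area.
            score = 0
            for y in range(height):
                for x in range(width):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < height and 0 <= xx < width:
                        score += prev[y][x] * curr[yy][xx]
            if best_score is None or score > best_score:
                best_score, best_shift = score, (dx, dy)
    return best_shift


# Two successive "images" of the surface: the same speck, moved 1 right, 2 down.
frame1 = [
    [0, 0, 0, 0, 0],
    [0, 9, 3, 0, 0],
    [0, 2, 7, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
frame2 = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 9, 3, 0],
    [0, 0, 2, 7, 0],
]
offset = estimate_offset(frame1, frame2)
```

The shift with the highest score is the one where the surface features line up, which is the offset between the two frames.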

Explain how computer keyboard works?

A keyboard is a lot like a miniature computer. It has its own processor and circuitry that carries information to and from that processor. A large part of this circuitry makes up the key matrix. The key matrix is a grid of circuits underneath the keys. In all keyboards, each circuit is broken at a point below each key. When you press a key, it presses a switch, completing the circuit and allowing a tiny amount of current to flow through. The mechanical action of the switch causes some vibration, called bounce, which the processor filters out. If you press and hold a key, the processor recognizes it as the equivalent of pressing a key repeatedly. When the processor finds a circuit that is closed, it compares the location of that circuit on the key matrix to the character map in its read-only memory (ROM). A character map is basically a comparison chart or lookup table. It tells the processor the position of each key in the matrix and what each keystroke or combination of keystrokes represents. For example, the character map lets the processor know that pressing the a key by itself corresponds to a small letter "a," but the Shift and a keys pressed together correspond to a capital "A."
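
The scan, debounce and lookup steps can be sketched as follows. Everything here is hypothetical: the 2x2 matrix layout, the function names, and the rule of accepting a switch state after three identical scans are assumptions for illustration, not the behaviour of a specific keyboard controller.

```python
# Hypothetical character map for a 2x2 key matrix: (row, column) -> key.
CHARACTER_MAP = {
    (0, 0): "a", (0, 1): "b",
    (1, 0): "c", (1, 1): "shift",
}


def scan_matrix(closed_switches):
    """Return the keys whose circuits are currently closed."""
    return {CHARACTER_MAP[pos] for pos in closed_switches if pos in CHARACTER_MAP}


def debounce(samples, stable_count=3):
    """Accept a switch state only once it is identical in N successive scans."""
    if len(samples) < stable_count:
        return None
    recent = samples[-stable_count:]
    return recent[0] if all(s == recent[0] for s in recent) else None


def to_character(keys):
    """Translate the pressed keys into a character, honouring Shift."""
    letters = sorted(k for k in keys if k != "shift")
    if len(letters) != 1:
        return None
    return letters[0].upper() if "shift" in keys else letters[0]


lower = to_character(scan_matrix({(0, 0)}))            # the a key alone
upper = to_character(scan_matrix({(0, 0), (1, 1)}))    # Shift and a together
```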

Explain how cathode ray tube (CRT) based screen technology works and name pros/cons.

The cathode ray tube (CRT) is a vacuum tube containing one or more electron guns and a phosphorescent screen used to display images. It has a means to accelerate and deflect the electron beam(s) onto the screen to create the images. The images may represent electrical waveforms (oscilloscope), pictures (television, computer monitor), radar targets or others. CRTs have also been used as memory devices, in which case the visible light emitted from the fluorescent material (if any) is not intended to have significant meaning to a visual observer (though the visible pattern on the tube face may cryptically represent the stored data).

The CRT uses an evacuated glass envelope which is large, deep (i.e. long from front screen face to rear end), fairly heavy, and relatively fragile. As a matter of safety, the face is typically made of thick lead glass so as to be highly shatter-resistant and to block most X-ray emissions, particularly if the CRT is used in a consumer product.

Cons: CRTs, despite recent advances, have remained relatively heavy and bulky and take up a lot of space in comparison to other display technologies. CRT screens have much deeper cabinets compared to flat panels and rear-projection displays for a given screen size, and so it becomes impractical to have CRTs larger than 40 inches (102 cm). The CRT disadvantages became especially significant in light of rapid technological advancements in LCD and plasma flat-panels which allow them to easily surpass 40 inches (102 cm) as well as being thin and wall-mountable, two key features that were increasingly being demanded by consumers.

Pros: CRTs are still popular in the printing and broadcasting industries as well as in the professional video, photography, and graphics fields due to their greater color fidelity, contrast, and better viewing from off-axis (wider viewing angle). CRTs also still find adherents in vintage video gaming because of their higher resolution per initial cost, lowest possible input lag, fast response time, and multiple native resolutions such as 576p.

Explain how liquid crystal displays (LCD) work and name pros/cons.

A liquid-crystal display (LCD) is a flat-panel display or other electronic visual display that uses the light-modulating properties of liquid crystals. Liquid crystals do not emit light directly.

LCDs are available to display arbitrary images (as in a general-purpose computer display) or fixed images with low information content, which can be displayed or hidden, such as preset words, digits, and 7-segment displays as in a digital clock. They use the same basic technology, except that arbitrary images are made up of a large number of small pixels, while other displays have larger elements. LCDs are used in a wide range of applications including computer monitors, televisions, instrument panels, aircraft cockpit displays, and signage.

One feature of liquid crystals is that they're affected by electric current. A particular sort of nematic (have a definite order or pattern) liquid crystal, called twisted nematics (TN), is naturally twisted. Applying an electric current to these liquid crystals will untwist them to varying degrees, depending on the current's voltage. LCDs use these liquid crystals because they react predictably to electric current in such a way as to control light passage.

To create an LCD, you take two pieces of polarized glass. You then add a coating of nematic liquid crystals to one of the filters. The first layer of molecules will align with the filter's orientation. Then add the second piece of glass with the polarizing film at a right angle to the first piece. Each successive layer of TN molecules will gradually twist until the uppermost layer is at a 90-degree angle to the bottom, matching the polarized glass filters.

As light strikes the first filter, it is polarized. The molecules in each layer then guide the light they receive to the next layer. As the light passes through the liquid crystal layers, the molecules also change the light's plane of vibration to match their own angle. When the light reaches the far side of the liquid crystal substance, it vibrates at the same angle as the final layer of molecules. If the final layer is matched up with the second polarized glass filter, then the light will pass through.

If we apply an electric charge to liquid crystal molecules, they untwist. When they straighten out, they change the angle of the light passing through them so that it no longer matches the angle of the top polarizing filter. Consequently, no light can pass through that area of the LCD, which makes that area darker than the surrounding areas.

Pros: They are available in a wider range of screen sizes than CRT and plasma displays, and since they do not use phosphors, they do not suffer image burn-in. The LCD screen is more energy-efficient and can be disposed of more safely than a CRT can. Cons: LCDs are, however, susceptible to image persistence.

Name screen technologies making use of thin film transistor (TFT) technology?

The best known application of thin-film transistors is in TFT LCDs (active-matrix), an implementation of LCD technology. Transistors are embedded within the panel itself, reducing crosstalk between pixels and improving image stability.

As of 2008, many color LCD TVs and monitors use this technology. TFT panels are frequently used in digital radiography applications (medical).

AMOLED (active-matrix organic light-emitting diode) screens, used in high-end smartphones, also contain a TFT layer.

Name uses for light polarization filters?

A polarizer or polariser is an optical filter that passes light of a specific polarization and blocks waves of other polarizations. It can convert a beam of light of undefined or mixed polarization into a beam with well-defined polarization, polarized light. Polarizers are used in many optical techniques and instruments, and polarizing filters find applications in photography, liquid crystal display technology and 3D watching. Polarizers can also be made for other types of electromagnetic waves besides light, such as radio waves, microwaves, and X-rays.

What are the benefits of twisted pair cabling and differential signalling?

Twisted pair cabling is a type of wiring in which two conductors of a single circuit are twisted together for the purposes of canceling out electromagnetic interference (EMI) from external sources; for instance, electromagnetic radiation from unshielded twisted pair (UTP) cables, and crosstalk between neighboring pairs.

Differential signaling is a method for electrically transmitting information using two complementary signals. The technique sends the same electrical signal as a differential pair of signals, each in its own conductor. The pair of conductors can be wires (typically twisted together) or traces on a circuit board. The receiving circuit responds to the electrical difference between the two signals, rather than the difference between a single wire and ground. Since the receiving circuit only detects the difference between the wires, the technique resists electromagnetic noise compared to one conductor with an un-paired reference (ground). The technique works for both analog signaling and digital signaling.
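
A small numeric sketch of why the difference rejects noise. It assumes the interference couples equally into both conductors (which twisting the pair is meant to ensure); the function names and the millivolt values are made up for illustration.

```python
def receive_single_ended(signal_mv, noise_mv):
    """One wire referenced to ground: the noise adds directly to the signal."""
    return [s + n for s, n in zip(signal_mv, noise_mv)]


def receive_differential(signal_mv, noise_mv):
    """Two wires carry +s and -s; both pick up the same noise."""
    positive = [s + n for s, n in zip(signal_mv, noise_mv)]
    negative = [-s + n for s, n in zip(signal_mv, noise_mv)]
    # The receiver looks only at the difference, so the common noise cancels.
    return [p - m for p, m in zip(positive, negative)]


signal = [500, -500, 500]     # transmitted levels in millivolts
noise = [120, -80, 300]       # interference picked up along the cable

single = receive_single_ended(signal, noise)    # distorted by the noise
diff = receive_differential(signal, noise)      # twice the signal, no noise
```

The differential result is exactly twice the transmitted level for every sample, regardless of the noise values.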

Active matrix vs passive matrix in display technology

Active-matrix display : Active-matrix LCDs depend on thin film transistors (TFT). Basically, TFTs are tiny switching transistors and capacitors. They are arranged in a matrix on a glass substrate. To address a particular pixel, the proper row is switched on, and then a charge is sent down the correct column. Since all of the other rows that the column intersects are turned off, only the capacitor at the designated pixel receives a charge. The capacitor is able to hold the charge until the next refresh cycle. And if we carefully control the amount of voltage supplied to a crystal, we can make it untwist only enough to allow some light through. It displays high-quality colour that is viewable from all angles, reduces crosstalk between pixels, and improves image stability.

Passive-matrix display : it uses a simple grid to supply the charge to a particular pixel on the display. The rows or columns are connected to integrated circuits that control when a charge is sent down a particular column or row. To turn on a pixel, the integrated circuit sends a charge down the correct column of one substrate and a ground activated on the correct row of the other. The row and column intersect at the designated pixel, and that delivers the voltage to untwist the liquid crystals at that pixel. The simplicity of the passive-matrix system is beautiful, but it has significant drawbacks, notably slow response time and imprecise voltage control. Response time refers to the LCD's ability to refresh the image displayed. The easiest way to observe slow response time in a passive-matrix LCD is to move the mouse pointer quickly from one side of the screen to the other. You will notice a series of "ghosts" following the pointer. Imprecise voltage control hinders the passive matrix's ability to influence only one pixel at a time. When voltage is applied to untwist one pixel, the pixels around it also partially untwist, which makes images appear fuzzy and lacking in contrast.

Storage abstractions

What is a block device?

In computing (specifically data transmission and data storage), a block, sometimes called a physical record, is a sequence of bytes or bits, usually containing some whole number of records, having a maximum length, a block size. Data thus structured are said to be blocked. The process of putting data into blocks is called blocking. Most file systems are based on a block device, which is a level of abstraction for the hardware responsible for storing and retrieving specified blocks of data.

What is logical block addressing and what are the benefits compared to older cylinder-head-sector addressing method in terms of harddisks?

Logical block addressing (LBA) is a common scheme used for specifying the location of blocks of data stored on computer storage devices, generally secondary storage systems such as hard disk drives. LBA is a particularly simple linear addressing scheme; blocks are located by an integer index, with the first block being LBA 0, the second LBA 1, and so on.

Cylinder-head-sector, also known as CHS, is an early method for giving addresses to each physical block of data on a hard disk drive. CHS addressing is the process of identifying individual sectors on a disk by their position in a track, where the track is determined by the head and cylinder numbers.

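With 32-bit block addresses and 512-byte sectors (as in an MBR partition table), LBA can address up to 2 TB, whereas CHS addressing through the BIOS is limited to about 8 GB. LBA also hides the physical disk geometry from the operating system.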
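
The two limits can be reproduced with simple arithmetic. The sketch below assumes 512-byte sectors, 32-bit block addresses, and the classic BIOS geometry limit of 1024 cylinders, 255 heads and 63 sectors per track; these assumptions are not stated in the notes. It also shows the usual conversion from a CHS tuple to a linear block number.

```python
SECTOR_SIZE = 512  # bytes per sector (assumed)


def chs_to_lba(cylinder, head, sector, heads_per_cylinder, sectors_per_track):
    """Convert a CHS address to a linear block address (sectors are 1-based)."""
    return (cylinder * heads_per_cylinder + head) * sectors_per_track + (sector - 1)


# Largest disk addressable with the assumed BIOS CHS geometry.
chs_limit = 1024 * 255 * 63 * SECTOR_SIZE     # 8,422,686,720 bytes, about 8.4 GB

# Largest disk addressable with a 32-bit block number.
lba32_limit = 2**32 * SECTOR_SIZE             # 2**41 bytes, about 2.2 TB (2 TiB)

first_block = chs_to_lba(0, 0, 1, heads_per_cylinder=255, sectors_per_track=63)
next_head = chs_to_lba(0, 1, 1, heads_per_cylinder=255, sectors_per_track=63)
```

The first sector of the disk (cylinder 0, head 0, sector 1) maps to LBA 0, and moving to the next head skips one full track of 63 sectors.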

What is a disk partition?

Disk partitioning is the creation of one or more regions on a hard disk or other secondary storage, so that an operating system can manage information in each region separately. Partitioning is typically the first step of preparing a newly manufactured disk, before any files or directories have been created. The disk stores the information about the partitions' locations and sizes in an area known as the partition table that the operating system reads before any other part of the disk. Each partition then appears in the operating system as a distinct "logical" disk that uses part of the actual disk.
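
As one concrete example of a partition table, the classic MBR scheme stores four 16-byte entries starting at byte offset 446 of the first sector. The sketch below decodes a single entry; choosing MBR, the helper name `parse_mbr_entry` and the sample values are assumptions for illustration (the notes do not say which partition table format is meant).

```python
import struct

SECTOR_SIZE = 512  # bytes per sector (assumed)


def parse_mbr_entry(entry):
    """Decode one 16-byte MBR partition entry into a small dict."""
    # Layout: status, CHS of first sector, type, CHS of last sector,
    # LBA of first sector, number of sectors (little-endian).
    status, _chs_first, ptype, _chs_last, first_lba, sectors = struct.unpack(
        "<B3sB3sII", entry
    )
    return {
        "bootable": status == 0x80,
        "type": ptype,
        "first_lba": first_lba,
        "size_bytes": sectors * SECTOR_SIZE,
    }


# Made-up entry: bootable, type 0x83 (Linux), starts at LBA 2048, 204800 sectors.
sample = struct.pack("<B3sB3sII", 0x80, b"\x00" * 3, 0x83, b"\x00" * 3, 2048, 204800)
partition = parse_mbr_entry(sample)
```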

What is a file system?

In computing, a file system (or filesystem) is used to control how data is stored and retrieved. Without a file system, information placed in a storage area would be one large body of data with no way to tell where one piece of information stops and the next begins. By separating the data into individual pieces, and giving each piece a name, the information is easily separated and identified. Taking its name from the way paper-based information systems are named, each group of data is called a "file". The structure and logic rules used to manage the groups of information and their names is called a "file system".

What is journaling in terms of filesystems and what are the benefits? Name some journaled file systems in use nowadays.

A journaling file system is a file system that keeps track of changes not yet committed to the file system's main part by recording the intentions of such changes in a data structure known as a "journal", which is usually a circular log. In the event of a system crash or power failure, such file systems can be brought back online quicker with lower likelihood of becoming corrupted.
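
The principle can be sketched with a toy key-value store standing in for a file system. The class `JournaledStore` and the `crash_before_apply` flag are hypothetical and exist only to simulate a power failure between recording an intention and applying it.

```python
class JournaledStore:
    """Toy model: every change is written to the journal before it is applied."""

    def __init__(self):
        self.data = {}       # stands in for the main part of the file system
        self.journal = []    # intended changes that are not yet committed

    def write(self, key, value, crash_before_apply=False):
        self.journal.append((key, value))    # 1. record the intention
        if crash_before_apply:
            return                           # simulated crash or power failure
        self.data[key] = value               # 2. apply the change
        self.journal.pop()                   # 3. committed, drop the journal entry

    def recover(self):
        """After a crash, replay whatever is still in the journal."""
        for key, value in self.journal:
            self.data[key] = value
        self.journal.clear()


store = JournaledStore()
store.write("a", 1)
store.write("b", 2, crash_before_apply=True)   # change is lost from data...
before = dict(store.data)
store.recover()                                # ...but replayed from the journal
after = dict(store.data)
```

Recovery only has to look at the short journal rather than check the whole disk, which is why such file systems come back online quickly.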

Depending on the actual implementation, a journaling file system may only keep track of stored metadata, resulting in improved performance at the expense of increased possibility for data corruption. Alternatively, a journaling file system may track both stored data and related metadata, while some implementations allow selectable behavior in this regard.

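Journaled file systems in use nowadays: HFS+, NTFS, ext4. Btrfs and ZFS are often listed alongside them, but they rely on copy-on-write rather than a classic journal.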

Bootloaders, kernels

What is the role of BIOS/UEFI in x86-based machines?

BIOS (Basic Input/Output System) runs during the boot process of a computer and prepares it for the OS and its programs.

UEFI (Unified Extensible Firmware Interface) is a replacement for BIOS. It offers several advantages over previous firmware interface, like:

- Ability to boot from large disks (over 2 TB) with a GUID Partition Table (GPT)

- CPU-independent architecture

- CPU-independent drivers

- Flexible pre-OS environment, including network capability

- Modular design

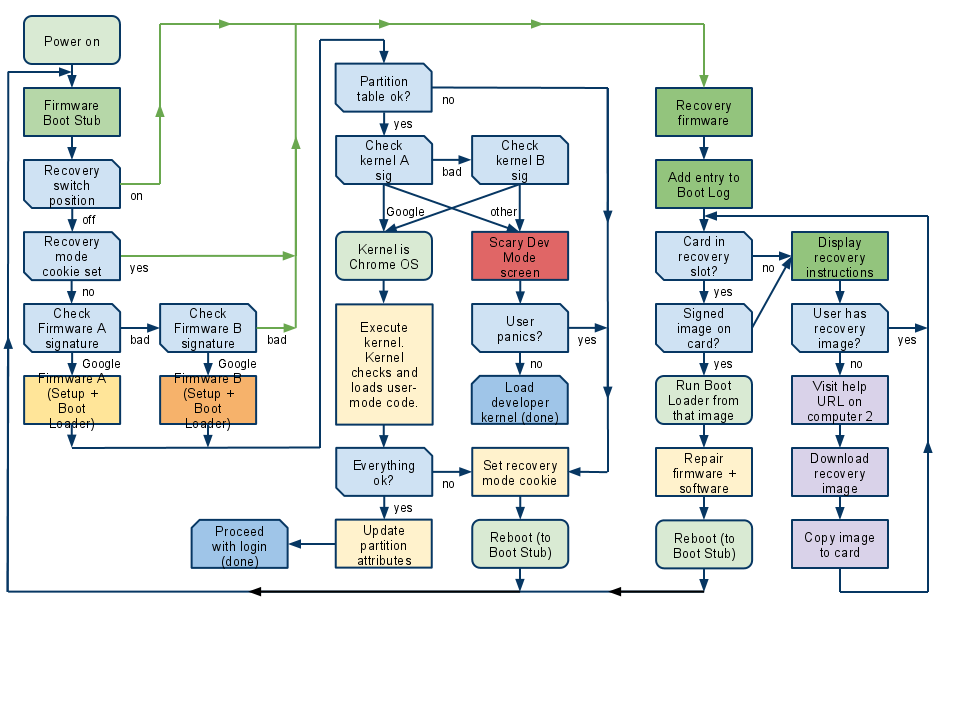

Explain step by step how operating system is booted up, see slides for flowchart.

1. The first thing a computer has to do when it is turned on is start up a special program called an operating system. That process is called booting. The instructions for booting are built into BIOS (Basic input/output system).

2. The BIOS will tell your computer to look into the boot disk (usually the lowest-numbered hard disk) for a boot loader and the boot loader will be pulled into memory and will be started.

3. The boot loader's job is to start the real operating system and it does it by looking for a kernel, loading it into memory and starting it.

5. Once the kernel starts, it has to look around to find the rest of the hardware and get ready to run programs. It does this by poking not at ordinary memory locations but rather at I/O ports, special bus addresses that are likely to have device controller cards listening at them for commands. The kernel doesn't poke at random; it has a lot of built-in knowledge about what it's likely to find where, and how controllers will respond if they're present. This process is called autoprobing.

6. Once the kernel is up and running, the first stage is over. The kernel then hands control to a special program called "init", which spawns housekeeping processes. The init process's first task is to check that your hard disks are okay.

7. Init's next step is to start several daemons. A daemon is a program like a print spooler, a mail listener or a WWW server that lurks in the background, waiting for things to do. These special programs often have to coordinate several requests that could conflict. They are daemons because it's often easier to write one program that runs constantly and knows about all requests than it would be to try to make sure that a flock of copies (each processing one request and all running at the same time) don't step on each other.

8. The next step is to prepare for users. Init starts a copy of a program called getty to watch your screen and keyboard (and maybe more copies to watch dial-in serial ports).

9. The next step is to start up various daemons that support networking and other services. The most important of these is your X server. X is a daemon that manages your display, keyboard, and mouse. Its main job is to produce the color pixel graphics you normally see on your screen.

10. When the X server comes up, during the last part of your machine's boot process, it effectively takes over the hardware from whatever virtual console was previously in control. That's when you'll see a graphical login screen, produced for you by a program called a display manager.

BIOS

- Basic input and output system

- Located at memory location 0xFFFF0

- Boot firmware designed to be run at startup

- POST- power on self test

- Run-time services

- Initial configuration selects which device to boot from

- Alternatively, Extensible Firmware Interface (EFI)

Describe the functionality provided by general purpose operating system.

An operating system (OS) is system software that manages computer hardware and software resources and provides common services for computer programs. The operating system is a component of the system software in a computer system. Application programs usually require an operating system to function.

Time-sharing operating systems schedule tasks for efficient use of the system and may also include accounting software for cost allocation of processor time, mass storage, printing, and other resources.

For hardware functions such as input and output and memory allocation, the operating system acts as an intermediary between programs and the computer hardware, although the application code is usually executed directly by the hardware and frequently makes system calls to an OS function or is interrupted by it. Operating systems are found on many devices that contain a computer—from cellular phones and video game consoles to web servers and supercomputers.

See architecture of Windows NT, Android, OS X.

What are the main differences between real mode and protected mode of x86-based processor?

Protected mode differed from the original mode of the 8086, which was later dubbed “real mode”, in that areas of memory could be physically isolated by the processor itself to prevent illegal writes to other programs running in memory at the same time. Prior to protected mode, multiple programs could be running in memory at the same time, but any program could access any area of memory and, therefore, if malicious or errant, for example, could take down the entire system. The 80286 introduced the protected mode to isolate that possibility by allowing the operating system (OS) to dictate where each program should run.

What happens during context switch?

During a context switch, the kernel will save the context of the old process in its PCB and then load the saved context of the new process scheduled to run.

What is the purpose of paged virtual memory?

Virtual memory is a memory management technique that is implemented using both hardware and software. It maps memory addresses used by a program, called virtual addresses, into physical addresses in computer memory. Main storage as seen by a process or task appears as a contiguous address space or collection of contiguous segments. The operating system manages virtual address spaces and the assignment of real memory to virtual memory. Address translation hardware in the CPU, often referred to as a memory management unit or MMU, automatically translates virtual addresses to physical addresses. Software within the operating system may extend these capabilities to provide a virtual address space that can exceed the capacity of real memory and thus reference more memory than is physically present in the computer.

The primary benefits of virtual memory include freeing applications from having to manage a shared memory space, increased security due to memory isolation, and being able to conceptually use more memory than might be physically available, using the technique of paging.

Paged virtual memory: nearly all implementations of virtual memory divide a virtual address space into pages, blocks of contiguous virtual memory addresses. Pages on contemporary systems are usually at least 4 kilobytes in size; systems with large virtual address ranges or amounts of real memory generally use larger page sizes.

On Linux, use cat /proc/meminfo to see memory statistics, including the virtual memory figures.
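The paged translation described above can be sketched in a few lines of Python. This is only an illustration: the page table contents and the function name translate are made up, and a real MMU does this in hardware with multi-level tables.

```python
PAGE_SIZE = 4096  # 4 KiB pages, the typical minimum mentioned above

# Hypothetical page table: virtual page number -> physical frame number.
page_table = {0: 5, 1: 9, 2: 1}


def translate(virtual_address):
    """Translate a virtual address to a physical address."""
    # Split the address into a page number and an offset inside that page.
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    if page_number not in page_table:
        # A real OS would handle the page fault, e.g. by loading the page from disk.
        raise LookupError(f"page fault: virtual page {page_number} is not mapped")
    frame_number = page_table[page_number]
    return frame_number * PAGE_SIZE + offset


# Virtual page 1, offset 0x234 maps to frame 9, offset 0x234.
print(hex(translate(0x1234)))  # 0x9234
```

The offset is carried over unchanged; only the page number is replaced by a frame number, which is why pages and frames have the same size.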

Libraries, frameworks

OS provides libraries

- Render fonts: libfreetype

- Render user interface: GTK, Qt, Cocoa, MFC

- Play back MP3s: libmad, GStreamer

- Encrypt connections: OpenSSL, LibreSSL, etc.

- Database functionality: SQLite

- Web browsing: WebKit

- 3D graphics: OpenGL, DirectX

- Runtime environments: Java VM, .NET

OS provides services

- IPC (Inter-process communication) aka send messages between apps

- Window management

- Cache geolocation information

- Manage phonecalls

- Network management

- Framework ≃ Bunch of libraries + some services

Programming languages

What are the major steps of compilation?

1. Lexer

The first phase of a compiler works as a text scanner. This phase scans the source code as a stream of characters and converts it into meaningful lexemes. The lexical analyser represents these lexemes in the form of tokens: <token-name, attribute-value>

2. Parser

The next phase is called syntax analysis or parsing. It takes the tokens produced by lexical analysis as input and generates a parse tree (or syntax tree). In this phase, token arrangements are checked against the source code grammar, i.e. the parser checks whether the expression made by the tokens is syntactically correct.

3. Type checker

Semantic analysis checks whether the constructed parse tree follows the rules of the language. For example, it checks that values are assigned between compatible data types and reports errors such as adding a string to an integer. The semantic analyser also keeps track of identifiers, their types and expressions, and of whether identifiers are declared before use. The semantic analyser produces an annotated syntax tree as output, from which intermediate code is produced.

4. Optimizer

The next phase optimizes the intermediate code. Optimization can be thought of as removing unnecessary code lines and rearranging the sequence of statements in order to speed up program execution without wasting resources (CPU, memory).

5. Code generator

In this phase, the code generator takes the optimized representation of the intermediate code and maps it to the target machine language. The code generator translates the intermediate code into a sequence of (generally) re-locatable machine code. Sequence of instructions of machine code performs the task as the intermediate code would do.
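The first of these stages, the lexer, can be sketched in Python with the standard re module. The token names (NUMBER, IDENT, OP) and the tiny grammar are assumptions chosen for illustration, not part of any particular compiler.

```python
import re

# Hypothetical token classes for a tiny expression language.
TOKEN_SPEC = [
    ("NUMBER", r"\d+"),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("OP", r"[+\-*/=()]"),
    ("SKIP", r"\s+"),
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(source):
    """Convert a character stream into (token-name, attribute-value) pairs."""
    tokens = []
    position = 0
    while position < len(source):
        match = MASTER.match(source, position)
        if match is None:
            raise SyntaxError(f"unexpected character {source[position]!r} at {position}")
        if match.lastgroup != "SKIP":  # whitespace carries no meaning here
            tokens.append((match.lastgroup, match.group()))
        position = match.end()
    return tokens


print(tokenize("x = 3 + 42"))
# [('IDENT', 'x'), ('OP', '='), ('NUMBER', '3'), ('OP', '+'), ('NUMBER', '42')]
```

A parser would then consume this token list and check it against the grammar.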


What are the differences between interpreted, JIT-compilation and traditional compiling?

Interpreted language

An interpreted language is a programming language for which most of its implementations execute instructions directly, without previously compiling a program into machine-language instructions. The interpreter executes the program directly, translating each statement into a sequence of one or more subroutines already compiled into machine code. Example: JavaScript

Just-in-time compilation

In computing, just-in-time (JIT) compilation, also known as dynamic translation, is compilation done during execution of a program – at run time – rather than prior to execution. Most often this consists of translation to machine code, which is then executed directly, but can also refer to translation to another format.

Compiler

A compiler is a computer program (or a set of programs) that transforms source code written in a programming language (the source language) into another computer language (the target language), with the latter often having a binary form known as object code. The most common reason for converting source code is to create an executable program.

What is control flow? Loops? Conditional statements?

In computer science, control flow (or alternatively, flow of control) is the order in which individual statements, instructions or function calls of an imperative program are executed or evaluated. The emphasis on explicit control flow distinguishes an imperative programming language from a declarative programming language. Within an imperative programming language, a control flow statement is a statement whose execution results in a choice being made as to which of two or more paths should be followed. For non-strict functional languages, functions and language constructs exist to achieve the same result, but they are not necessarily called control flow statements.

The kinds of control flow statements supported by different languages vary, but can be categorized by their effect:

- continuation at a different statement (unconditional branch or jump),

- executing a set of statements only if some condition is met (choice - i.e., conditional branch),

- executing a set of statements zero or more times, until some condition is met (i.e., loop - the same as conditional branch),

- executing a set of distant statements, after which the flow of control usually returns (subroutines, coroutines, and continuations),

- stopping the program, preventing any further execution (unconditional halt).

Count-controlled loops

Most programming languages have constructions for repeating a loop a certain number of times. Note that if N is less than 1 in these examples then the language may specify that the body is skipped completely, or that the body is executed just once with N = 1. In most cases counting can go downwards instead of upwards and step sizes other than 1 can be used.

FOR I = 1 TO N | for I := 1 to N do begin

xxx | xxx

NEXT I | end;

DO I = 1,N | for ( I=1; I<=N; ++I ) {

xxx | xxx

END DO | }

In many programming languages, only integers can be reliably used in a count-controlled loop. Floating-point numbers are represented imprecisely due to hardware constraints, so a loop that counts in fractional steps may run one time more or fewer than expected.
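A minimal Python sketch of the floating-point problem in count-controlled loops. The step of 0.1 and the bound of 1.0 are chosen for illustration and do not come from the original notes.

```python
def count_float_steps():
    """Count iterations of a loop that steps a float by 0.1 up to 1.0."""
    x = 0.0
    steps = 0
    while x < 1.0:
        x += 0.1  # 0.1 has no exact binary representation, so errors accumulate
        steps += 1
    return steps


def count_integer_steps():
    """The same loop driven by an integer counter."""
    steps = 0
    for _ in range(10):
        steps += 1
    return steps


print(count_float_steps())    # 11, because ten additions give 0.9999999999999999
print(count_integer_steps())  # 10
```

The usual remedy is to count with an integer and derive the fractional value from it, e.g. x = i * 0.1.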

Data encoding

What is bit? Nibble? Byte? Word?

A bit is the basic unit of information in computing and digital communications. A bit can have only one of two values, and may therefore be physically implemented with a two-state device. These values are most commonly represented as either a 0 or 1. The two values can also be interpreted as logical values (true/false, yes/no), algebraic signs (+/−), activation states (on/off), or any other two-valued attribute.

A nibble is half of an octet, i.e. four bits. A nibble has sixteen possible values.

Byte is a unit of eight bits. Comes from the number of bits used to encode a single character of text in a computer. The term byte initially meant 'the smallest addressable unit of memory'. In the past, 5-, 6-, 7-, 8-, and 9-bit bytes have all been used. The term byte was usually not used at all in connection with bit- and word-addressed machines. The term octet always refers to an 8-bit quantity. It is mostly used in the field of computer networking, where computers with different byte widths might have to communicate. In modern usage byte almost invariably means eight bits, since all other sizes have fallen into disuse; thus byte has come to be synonymous with octet.

The term 'word' is used for a small group of bits which are handled simultaneously by processors of a particular architecture. The size of a word is thus CPU-specific. The size of a word is reflected in many aspects of a computer's structure and operation; the majority of the registers in a processor are usually word sized and the largest piece of data that can be transferred to and from the working memory in a single operation is a word in many (not all) architectures. Modern general purpose computers usually use 32 or 64 bits.

Write 9375 in binary, hexadecimal?

In the binary, or base two, number system each digit position stands for a power of 2, starting with 2^0 at the rightmost position and increasing by one power with each step to the left.

| 2^14 | 2^13 | 2^12 | 2^11 | 2^10 | 2^9 | 2^8 | 2^7 | 2^6 | 2^5 | 2^4 | 2^3 | 2^2 | 2^1 | 2^0 |

| 16384 | 8192 | 4096 | 2048 | 1024 | 512 | 256 | 128 | 64 | 32 | 16 | 8 | 4 | 2 | 1 |

| 0 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 1 | 1 | 1 | 1 |

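To convert the decimal number 9375 to binary, go through the powers of two from the largest down and check whether each one fits into what remains. 8192 fits into 9375, so mark down 1 and continue with 9375 - 8192 = 1183. 4096 and 2048 do not fit into 1183, so mark 0 for each. 1024 fits, so mark 1 and continue with 1183 - 1024 = 159, and so on. The number 9375 in base ten is 0b10010010011111 in binary, where the prefix '0b' denotes base two. Conversion from binary to decimal works the other way around: add up the powers of two whose bit is 1. If perplexed, see the explanatory video on Khan Academy.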

Hexadecimal or base 16 system goes from 0 until 9, then starts with A (10 base 10) until F (15 base 10).

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 |

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | A | B | C | D | E | F |

Conversion from decimal to hex is similar to decimal to base two conversion. First, we figure out the powers of 16:

| 16^0 | 16^1 | 16^2 | 16^3 | 16^4 |

| 1 | 16 | 256 | 4096 | 65 536 |

| F | 9 | 4 | 2 | <- |

9375 contains two multiples of 4096 (9375 - 2*4096 = 9375 - 8192 = 1183), 1183 contains 4 multiples of 256 (1183 - 4*256 = 159), and 159 contains 9 multiples of 16 (159 - 9*16 = 15). The remainder 15 in decimal is F in hex, so we arrive at 0x249F. As with base 2, you can go the other way to convert from hex to decimal. If you still don't get it, watch a video on Khan Academy.

Write 0xDEADBEEF in decimal?

Following the table above we calculate, 13x16^7 + 14x16^6 + 10x16^5 + 13x16^4 + 11x16^3 + 14x16^2 + 14x16^1 + 15x16^0 = A BIG NUMBER! (3735928559)
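The conversions above can be checked with a short Python sketch. Note that it uses repeated division by the base rather than the subtract-the-largest-power method from the text; both give the same digits. The function name to_base is made up for this example.

```python
DIGITS = "0123456789ABCDEF"


def to_base(number, base):
    """Convert a non-negative integer to a digit string in base 2..16."""
    if number == 0:
        return "0"
    result = ""
    while number > 0:
        # The remainder is the next digit, starting from the rightmost one.
        number, remainder = divmod(number, base)
        result = DIGITS[remainder] + result
    return result


print(to_base(9375, 2))     # 10010010011111
print(to_base(9375, 16))    # 249F
print(int("DEADBEEF", 16))  # 3735928559
```

Python's built-in bin(), hex() and int(text, base) do the same job; the loop is spelled out only to show the mechanism.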

What is quantization in terms of signal processing?

Quantization, in mathematics and digital signal processing, is the process of mapping a large set of input values to a (countable) smaller set. Rounding and truncation are typical examples of quantization processes. Quantization is involved to some degree in nearly all digital signal processing, as the process of representing a signal in digital form ordinarily involves rounding. Quantization also forms the core of essentially all lossy compression algorithms. The difference between an input value and its quantized value (such as round-off error) is referred to as quantization error. A device or algorithmic function that performs quantization is called a quantizer. An analog-to-digital converter is an example of a quantizer.
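As a rough illustration of quantization and quantization error, here is a uniform quantizer sketched in Python. The input range of -1.0 to 1.0 and the function names quantize and reconstruct are assumptions for this example; real converters differ in detail.

```python
import math


def quantize(sample, bits):
    """Map a sample in [-1.0, 1.0] to one of 2**bits integer levels."""
    levels = 2 ** bits
    step = 2.0 / levels
    index = math.floor((sample + 1.0) / step)
    # Clamp so that the top of the range falls into the highest level.
    return min(max(index, 0), levels - 1)


def reconstruct(index, bits):
    """Return the midpoint value represented by a quantization level."""
    step = 2.0 / (2 ** bits)
    return -1.0 + (index + 0.5) * step


sample = 0.3
level = quantize(sample, 3)            # 3 bits give 8 levels
approximation = reconstruct(level, 3)
print(level, approximation, abs(sample - approximation))
```

With 3 bits the step is 0.25, so the quantization error of any in-range sample is at most 0.125. Each extra bit halves that bound.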

How are integers stored in binary? What integer range can be described using n bits? How many bits are required to describe integer range from n .. m.

The value of an item with an integral type is the mathematical integer that it corresponds to. Integral types may be unsigned (capable of representing only non-negative integers) or signed (capable of representing negative integers as well).

The most common representation of a positive integer is a string of bits, using the binary numeral system. The order of the memory bytes storing the bits varies; see endianness. The width or precision of an integral type is the number of bits in its representation. An integral type with n bits can encode 2^n numbers; an unsigned n-bit type, for example, covers 0 through 2^n − 1.

There are four well-known ways to represent signed numbers in a binary computing system. The most common is two's complement, which allows a signed integral type with n bits to represent numbers from −2^(n−1) through 2^(n−1) − 1.
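The ranges above, and the number of bits needed for an arbitrary range, can be sketched in Python. The helper names are made up, and bits_for_range assumes that values are stored as an offset from the lower bound, which is one possible encoding among several.

```python
def unsigned_range(bits):
    """Smallest and largest value of an unsigned integer with the given width."""
    return 0, 2 ** bits - 1


def signed_range(bits):
    """Smallest and largest value of a two's complement integer."""
    return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1


def bits_for_range(low, high):
    """Bits needed to tell apart every integer from low to high inclusive."""
    count = high - low + 1
    # (count - 1).bit_length() equals ceil(log2(count)) without float rounding.
    return max(1, (count - 1).bit_length())


print(unsigned_range(8))         # (0, 255)
print(signed_range(8))           # (-128, 127)
print(bits_for_range(10, 20))    # 4, since 11 values need 4 bits
```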

How are single precision and double precision floating point numbers stored in binary according to IEEE754 standard?

What is the difference between CMYK and RGB color models? How are YUV, HSV and HSL colorspaces related to RGB? What are sRGB and YCbCr and where are they used?

CMYK Color Mode

Digital printers typically put color on paper using CMYK. This is a four-color mode that uses cyan, magenta, yellow and black in various amounts to create all of the necessary colors when printing images. It is a subtractive process: starting from a white background, each added ink absorbs (removes) more light, which creates new colors. When the first three colors are added together, the result is not pure black, but rather a very dark brown. The K color, or black, is used to completely remove light from the printed picture, which is why the eye perceives the color as black.

RGB Color Mode

RGB is the color scheme that is associated with electronic displays, such as CRT, LCD monitors, digital cameras and scanners. It is an additive type of color mode that, from a black background, combines the primary colors, red, green and blue, in various degrees to create a variety of different colors. When all three of the colors are combined and displayed to their full extent, the result is a pure white. When all three colors are combined to the lowest degree, or value, the result is black. Software such as photo editing programs use the RGB color mode because it offers the widest range of colors.

YUV, HSV, HSL

The Y'UV model defines a color space in terms of one luma (Y') and two chrominance (UV) components. The Y'UV color model is used in the PAL and SECAM composite color video standards. YUV models human perception of color more closely than the standard RGB model used in computer graphics hardware. Y' stands for the luma component (the brightness) and U and V are the chrominance (color) components.

HSV (hue, saturation, value) is often used by artists because it is often more natural to think about a color in terms of hue and saturation than in terms of additive or subtractive color components. HSL (hue, saturation, lightness/luminance), also known as HSI (hue, saturation, intensity) or HSD (hue, saturation, darkness), is quite similar to HSV, with "lightness" replacing "brightness". HSV and HSL are transformations of an RGB colorspace, and their components and colorimetry are relative to the RGB colorspace from which they were derived.
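That HSV and HSL are just transformations of RGB can be seen with Python's standard colorsys module, which works on components in the range 0.0 to 1.0. Pure red is used here as an arbitrary example color.

```python
import colorsys

red = (1.0, 0.0, 0.0)  # R, G, B

# Note the differing component order: HSV versus HLS (not HSL).
hue, saturation, value = colorsys.rgb_to_hsv(*red)
hue2, lightness, saturation2 = colorsys.rgb_to_hls(*red)

print(hue, saturation, value)        # 0.0 1.0 1.0
print(hue2, lightness, saturation2)  # 0.0 0.5 1.0

# The transformation is reversible, so no color information is lost.
print(colorsys.hsv_to_rgb(hue, saturation, value))  # (1.0, 0.0, 0.0)
```

The hue is the same in both models; they differ in how they express brightness, which is why fully saturated red has value 1.0 but lightness 0.5.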

sRGB and YCbCr

sRGB is pretty much the default color space everywhere you look. This means that most browsers, applications, and devices are designed to work with sRGB, and assume that images are in the sRGB color space. In fact, most browsers simply ignore the embedded color space information in images and render them as sRGB images. If you publish your images on the web, you should always save and publish them as sRGB.

YCbCr is one of two primary color spaces used to represent digital component video (the other is RGB). The difference between YCbCr and RGB is that YCbCr represents color as brightness and two color difference signals, while RGB represents color as red, green and blue. In YCbCr, the Y is the brightness (luma), Cb is blue minus luma (B-Y) and Cr is red minus luma (R-Y). MPEG compression, which is used in DVDs, digital TV and Video CDs, is coded in YCbCr.

How is data encoded on audio CD-s? What is the capacity of an audio CD?

There are 2 channels (left and right). The amplitude of the sound signal is encoded with 16 bits (2 bytes) per sample per channel. The sampling rate is how often this amplitude is measured: 44.1 kHz (44 100 times a second). A CD can hold up to 80 minutes of music (4800 seconds).

Capacity of an audio CD in bytes = 2 channels x 2 bytes (16 bits) x 44 100 Hz x 4800 seconds = 846 720 000 bytes, or about 847 MB.
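The same arithmetic as a small Python sketch, which also gives the bitrate of CD audio. The constant names are made up for this example; the numbers are the ones stated above.

```python
CHANNELS = 2
BYTES_PER_SAMPLE = 2      # 16 bits
SAMPLE_RATE_HZ = 44_100
DURATION_SECONDS = 80 * 60

bytes_per_second = CHANNELS * BYTES_PER_SAMPLE * SAMPLE_RATE_HZ
capacity_bytes = bytes_per_second * DURATION_SECONDS
bitrate_bits_per_second = bytes_per_second * 8

print(bytes_per_second)         # 176400
print(capacity_bytes)           # 846720000
print(bitrate_bits_per_second)  # 1411200, i.e. 1411.2 kbit/s
```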

What is sampling rate? What is bit depth? What is resolution?

SAMPLE RATE: Sample rate is the number of samples of audio carried per second, measured in Hz or kHz (one kHz being 1 000 Hz). For example, 44 100 samples per second can be expressed as either 44 100 Hz, or 44.1 kHz. Bandwidth is the difference between the highest and lowest frequencies carried in an audio stream.

BIT DEPTH: In an image, bit depth refers to the color information stored per pixel. The higher the bit depth of an image, the more colors it can store. The simplest image, a 1 bit image, can only show two colors, black and white. In audio, bit depth is the number of bits used for each sample (16 for CD audio).

RESOLUTION: Resolution is the number of pixels (individual points of color) contained on a display monitor, expressed in terms of the number of pixels on the horizontal axis and the number on the vertical axis. The sharpness of the image on a display depends on the resolution and the size of the monitor.

What is bitrate?

Bitrate is the number of bits that are conveyed or processed per unit of time.

The bit rate is quantified using the bits per second unit (symbol: "bit/s"), often in conjunction with an SI prefix such as "kilo" (1 kbit/s = 1000 bit/s), "mega" (1 Mbit/s = 1000 kbit/s), "giga" (1 Gbit/s = 1000 Mbit/s) or "tera" (1 Tbit/s = 1000 Gbit/s). The non-standard abbreviation "bps" is often used to replace the standard symbol "bit/s", so that, for example, "1 Mbps" is used to mean one million bits per second.

One byte per second (1 B/s) corresponds to 8 bit/s.

What is lossy/lossless compression?

Lossless and lossy compression are terms that describe whether or not, in the compression of a file, all original data can be recovered when the file is uncompressed. With lossless compression, every single bit of data that was originally in the file remains after the file is uncompressed. With lossy compression, some of the data is permanently discarded, so the uncompressed file is only an approximation of the original.
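The lossless case can be demonstrated with Python's standard zlib module: after a round trip the data is bit-for-bit identical. The sample data is made up and deliberately repetitive so that it compresses well.

```python
import zlib

original = b"AAAAABBBBBCCCCC" * 100  # 1500 bytes of repetitive data

compressed = zlib.compress(original)
restored = zlib.decompress(compressed)

print(len(original), len(compressed))
print(restored == original)  # True: nothing was lost
```

A lossy codec such as JPEG or MP3 would fail this equality check by design, trading exact recovery for a smaller file.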

What is JPEG suitable for? Is JPEG lossy or lossless compression method?

JPEG is a commonly used method of lossy compression for digital images, particularly for those images produced by digital photography. The degree of compression can be adjusted, allowing a selectable tradeoff between storage size and image quality. JPEG typically achieves 10:1 compression with little perceptible loss in image quality. JPEG compression is used in a number of image file formats. JPEG/Exif is the most common image format used by digital cameras and other photographic image capture devices; along with JPEG/JFIF, it is the most common format for storing and transmitting photographic images on the World Wide Web. These format variations are often not distinguished, and are simply called JPEG.

What is PNG suitable for? Does PNG support compression?

Portable Network Graphics is a raster graphics file format that supports lossless data compression. PNG was created as an improved, non-patented replacement for Graphics Interchange Format (GIF), and is the most used lossless image compression format on the Internet.

How are time domain and frequency domain related in terms of signal processing? What is Fourier transform and where it is applied?

Code execution in processor

Given ~10 instructions and their explanations, follow the instructions and elaborate after every step what happened in the processor?

Microcontrollers

What distinguishes microcontroller from microprocessor?

| MICROPROCESSOR | MICROCONTROLLER |

| A microprocessor is the heart of a computer system. | A microcontroller is the heart of an embedded system. |

| It is just a processor. Memory and I/O components have to be connected externally. | A microcontroller has an internal processor along with internal memory and I/O components. |

| Since memory and I/O have to be connected externally, the circuit becomes large. | Since memory and I/O are present internally, the circuit is small. |

| Cannot be used in compact systems and is hence inefficient there. | Can be used in compact systems and is hence an efficient choice. |

| Cost of the entire system increases. | Cost of the entire system is low. |

| Due to external components, the total power consumption is high. Hence it is not suitable for devices running on stored power such as batteries. | Since there are few external components, total power consumption is lower and it can be used with devices running on stored power such as batteries. |

| Most microprocessors do not have power saving features. | Most microcontrollers have power saving modes such as idle mode and power saving mode. This helps to reduce power consumption even further. |

| Since memory and I/O components are all external, each instruction needs external operations, hence it is relatively slower. | Since components are internal, most operations are internal, hence speed is high. |

| Microprocessors have fewer registers, hence more operations are memory based. | Microcontrollers have more registers, hence the programs are easier to write. |

| Microprocessors are based on the von Neumann architecture, where program and data are stored in the same memory module. | Microcontrollers are based on the Harvard architecture, where program memory and data memory are separate. |

| Mainly used in personal computers. | Mainly used in embedded devices such as washing machines and MP3 players. |

What are the differences between Harvard architecture and von Neumann architecture?

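A von Neumann machine keeps instructions and data in the same memory and reaches them over the same bus, so it can do only one memory operation at a time: it cannot read or write data while fetching an instruction. A Harvard machine has separate memories and buses for instructions and data, so it can fetch an instruction and access data at the same time.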

What is an interrupt?

An interrupt is a signal that something requires immediate attention from the processing unit. The processor suspends its current activity, saves its state, handles the interrupt and then restores the previous state and continues where it left off.

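What is a timer?

A timer is a hardware counter in the microcontroller that is driven by the built-in clock oscillator. Software uses it to track the passage of time, for example by reading the counter value or by having the timer raise an interrupt when it reaches a set value.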

Introduction to Boole algebra

Simplify A AND A OR B

Show addition of X and Y in binary

Two's Complement Addition

Add the values and discard any carry-out bit.

Examples: using 8-bit two’s complement numbers.

Add −8 to +3:

(+3) 0000 0011

+(−8) 1111 1000

-----------------

(−5) 1111 1011

Add −5 to −2:

(−2) 1111 1110

+(−5) 1111 1011

-----------------

(−7) 1 1111 1001 : discard carry-out

Show subtraction of X and Y in binary

Two's Complement Subtraction

Normally accomplished by negating the subtrahend and adding it to the minuend. Any carry-out is discarded.

Example: Using 8-bit Two's Complement Numbers (−128 ≤ x ≤ +127)

Subtract +5 from +8. First negate the subtrahend:

(+5) 0000 0101 -> negate -> 1111 1011 (−5)

Then add it to the minuend:

(+8) 0000 1000

+(−5) 1111 1011

-----------------

(+3) 1 0000 0011 : discard carry-out
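The addition and subtraction examples above can be reproduced with a small Python sketch. Python integers are unbounded, so the 8-bit width is simulated with a mask; the helper names are made up for this example.

```python
BITS = 8
MASK = (1 << BITS) - 1  # 0b11111111


def encode(value):
    """Return the 8-bit two's complement bit pattern of a signed value."""
    return value & MASK


def decode(pattern):
    """Interpret an 8-bit pattern as a signed two's complement value."""
    if pattern & (1 << (BITS - 1)):  # sign bit set: the value is negative
        return pattern - (1 << BITS)
    return pattern


def add(a, b):
    """Add two signed values; the mask discards any carry-out bit."""
    return decode((encode(a) + encode(b)) & MASK)


def subtract(a, b):
    """Subtract by negating b (invert all bits, add one) and adding."""
    negated = (~encode(b) + 1) & MASK
    return decode((encode(a) + negated) & MASK)


print(format(encode(-5), "08b"))  # 11111011
print(add(3, -8))                 # -5
print(add(-2, -5))                # -7
print(subtract(8, 5))             # 3
```

Results outside −128 to +127 wrap around, which is the overflow behaviour of real 8-bit hardware.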

Show multiplication of X and Y in binary

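Multiplication example: 1100101 (base 2) × 111101 (base 2), which is 101 × 61 in base 10.

The multiplier 111101 has ones at bit positions 0, 2, 3, 4 and 5, so the product is the sum of the multiplicand 1100101 shifted left by 0, 2, 3, 4 and 5 places. The shifts are written out with trailing zeros:

1100101 × 111101

-------

1100101

+110010100

+1100101000

+11001010000

+110010100000

-------------

?????????????

-------------

Adding five numbers at once is error-prone. Easier to use intermediary results:

1100101

+110010100

----------

111111001

+1100101000

------------

10100100001

+11001010000

-------------

101101110001

+110010100000

-------------

1100000010001 = 4096 + 2048 + 16 + 1 = 6161 (base 10)

-------------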
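The shift-and-add procedure used in this multiplication can be sketched in Python as follows. The function name multiply is made up, and the sketch assumes non-negative operands.

```python
def multiply(multiplicand, multiplier):
    """Multiply two non-negative integers by shifting and adding."""
    result = 0
    shift = 0
    while multiplier:
        if multiplier & 1:
            # This bit of the multiplier is 1: add the shifted multiplicand.
            result += multiplicand << shift
        multiplier >>= 1
        shift += 1
    return result


product = multiply(0b1100101, 0b111101)
print(bin(product))  # 0b1100000010001
print(product)       # 6161
```

Each loop iteration handles one bit of the multiplier, so the running total goes through the same intermediary results as the worked example.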

Hardware description languages

What are the uses for hardware description languages?

In electronics, a hardware description language (HDL) is a specialized computer language used to program the structure, design and operation of electronic circuits, and most commonly, digital logic circuits.

A hardware description language enables a precise, formal description of an electronic circuit that allows for the automated analysis, simulation, and simulated testing of an electronic circuit. It also allows for the compilation of an HDL program into a lower level specification of physical electronic components, such as the set of masks used to create an integrated circuit.

A hardware description language looks much like a programming language such as C; it is a textual description consisting of expressions, statements and control structures. One important difference between most programming languages and HDLs is that HDLs explicitly include the notion of time.

What is latch?

A latch is an example of a bistable multivibrator, that is, a device with exactly two stable states. These states are high-output and low-output. A latch has a feedback path, so information can be retained by the device. Therefore latches can be memory devices, and can store one bit of data for as long as the device is powered. As the name suggests, latches are used to "latch onto" information and hold in place. Latches are very similar to flip-flops, but are not synchronous devices, and do not operate on clock edges as flip-flops do.

What is flip-flop?

A flip-flop is a device very like a latch in that it is a bistable multivibrator, having two states and a feedback path that allows it to store a bit of information. The difference between a latch and a flip-flop is that a latch is asynchronous, and the outputs can change as soon as the inputs do (or at least after a small propagation delay). A flip-flop, on the other hand, is edge-triggered and only changes state when a control signal goes from high to low or low to high. This distinction is relatively recent and is not formal, with many authorities still referring to flip-flops as latches and vice versa, but it is a helpful distinction to make for the sake of clarity.

There are several different types of flip-flop each with its own uses and peculiarities. The four main types of flip-flop are : SR, JK, D, and T.

What is mux (multiplexer)?

A multiplexer (or mux) is a device that selects one of several analog or digital input signals and forwards the selected input into a single line. A multiplexer of 2^n inputs has n select lines, which are used to select which input line to send to the output. Multiplexers are mainly used to increase the amount of data that can be sent over the network within a certain amount of time and bandwidth. A multiplexer is also called a data selector.

An electronic multiplexer makes it possible for several signals to share one device or resource, for example one A/D converter or one communication line, instead of having one device per input signal.
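The behaviour of a multiplexer can be modelled in Python in a few lines. This is a behavioural sketch only, not a circuit description; the function name mux and the convention that the first select bit is the most significant one are assumptions.

```python
def mux(inputs, select):
    """Return the input chosen by the select lines.

    inputs: list of 2**n signal values
    select: list of n bits (0 or 1), most significant bit first
    """
    if len(inputs) != 2 ** len(select):
        raise ValueError("a mux with n select lines needs exactly 2**n inputs")
    index = 0
    for bit in select:
        index = (index << 1) | bit  # build the input number from the select bits
    return inputs[index]


# A 4-to-1 multiplexer has 2 select lines.
print(mux(["a", "b", "c", "d"], [1, 0]))  # c
```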

What is register? Register file?

Registers are a special, high-speed storage area within the CPU. All data must be represented in a register before it can be processed. For example, if two numbers are to be multiplied, both numbers must be in registers, and the result is also placed in a register. A register may hold a computer instruction, a storage address, or any kind of data (such as a bit sequence or individual characters). A register must be large enough to hold an instruction - for example, in a 32-bit instruction computer, a register must be 32 bits in length. In some computer designs, there are smaller registers - for example, half-registers - for shorter instructions. Depending on the processor design and language rules, registers may be numbered or have arbitrary names.

A register file is an array of processor registers in a central processing unit (CPU). Modern integrated circuit-based register files are usually implemented by way of fast static RAMs with multiple ports. Such RAMs are distinguished by having dedicated read and write ports, whereas ordinary multiported SRAMs will usually read and write through the same ports.

The instruction set architecture of a CPU will almost always define a set of registers which are used to stage data between memory and the functional units on the chip. In simpler CPUs, these architectural registers correspond one-for-one to the entries in a physical register file within the CPU. More complicated CPUs use register renaming, so that the mapping of which physical entry stores a particular architectural register changes dynamically during execution. The register file is part of the architecture and visible to the programmer, as opposed to the concept of transparent caches.

What is ALU?

An arithmetic logic unit (ALU) is a digital electronic circuit that performs arithmetic and bitwise logical operations on integer binary numbers. This is in contrast to a floating-point unit (FPU), which operates on floating point numbers. An ALU is a fundamental building block of many types of computing circuits, including the central processing unit (CPU) of computers, FPUs, and graphics processing units (GPUs). A single CPU, FPU or GPU may contain multiple ALUs.

The inputs to an ALU are the data to be operated on, called operands, and a code indicating the operation to be performed; the ALU's output is the result of the performed operation. In many designs, the ALU also exchanges additional information with a status register, which relates to the result of the current or previous operations.

What is floating-point unit?

A floating-point unit (FPU) is a part of a computer system specially designed to carry out operations on floating point numbers. Typical operations are addition, subtraction, multiplication, division, square root, and bitshifting. Some systems (particularly older, microcode-based architectures) can also perform various transcendental functions such as exponential or trigonometric calculations, though in most modern processors these are done with software library routines.

In general purpose computer architectures, one or more FPUs may be integrated with the central processing unit; however many embedded processors do not have hardware support for floating-point operations.

What is a cache?

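A cache (pronounced "cash") is a small, fast storage area that keeps copies of frequently or recently used data so that later requests for that data can be served faster. A CPU cache does this for the contents of main memory. A browser cache is a space on your computer's hard drive and in RAM where your browser saves copies of previously visited Web pages. Your browser uses the cache like a short-term memory.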

What is a bus?

In computer architecture, a bus (related to the Latin "omnibus", meaning "for all") is a communication system that transfers data between components inside a computer, or between computers.

Show the circuit diagram for A OR B AND C, NOT A AND B, <insert some other Boole formula here>?

Show the truth table for <insert Boole formula here>?

Logical Conjunction (AND)

| p | q | p ∧ q |

| T | T | T |

| T | F | F |

| F | T | F |

| F | F | F |

Logical Disjunction (OR)

| p | q | p ∨ q |
|---|---|---|
| T | T | T |
| T | F | T |
| F | T | T |
| F | F | F |

Logical NAND

| p | q | p ↑ q |
|---|---|---|
| T | T | F |
| T | F | T |
| F | T | T |
| F | F | T |

Logical NOR

| p | q | p ↓ q |
|---|---|---|
| T | T | F |
| T | F | F |
| F | T | F |
| F | F | T |
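Truth tables like the ones above can also be generated mechanically, which is a handy way to check an exam answer. The sketch below is one possible approach; the helper names `truth_table` and `show` are invented for this example.

```python
from itertools import product


def truth_table(names, formula):
    """Return one row per input combination: the inputs followed by the result."""
    rows = []
    # product([True, False], ...) lists T before F, matching the tables above.
    for values in product([True, False], repeat=len(names)):
        rows.append(values + (formula(*values),))
    return rows


def show(names, label, formula):
    """Print the table in the same pipe-separated layout as the notes."""
    print("| " + " | ".join(list(names) + [label]) + " |")
    for row in truth_table(names, formula):
        print("| " + " | ".join("T" if v else "F" for v in row) + " |")


show("pq", "p NAND q", lambda p, q: not (p and q))
show("pq", "p NOR q", lambda p, q: not (p or q))
# In Python, as in Boolean algebra, AND binds tighter than OR,
# so this formula is read as A OR (B AND C).
show("ABC", "A OR B AND C", lambda a, b, c: a or b and c)
```

Any formula from the exam question can be passed in as a small function; a formula with n variables always yields 2^n rows.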

Write the equivalent Boolean formula of a circuit diagram.

Publishing work

What are the major implications of MIT, BSD and GPL licenses?

The MIT License is a free software license originating at the Massachusetts Institute of Technology (MIT). It is a permissive free software license, meaning that it puts only very limited restrictions on reuse and therefore has excellent license compatibility. The MIT License permits reuse within proprietary software provided all copies of the licensed software include a copy of the MIT License terms and the copyright notice. The MIT License is also compatible with many copyleft licenses such as the GPL; MIT-licensed software can be integrated into GPL software, but not the other way around. The MIT License has long been an important and widely used license in the FOSS domain, and in 2015, according to Black Duck Software and GitHub data, it became the most popular license, ahead of GPL variants and other FOSS licenses. Notable software packages that use one of the versions of the MIT License include Expat, the Mono development platform class libraries, Ruby on Rails, Node.js, jQuery and the X Window System, for which the license was written.

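BSD licenses are a family of permissive free software licenses, imposing minimal restrictions on the redistribution of covered software. A BSD license has at most four clauses, plus a copyright header and a disclaimer footer. The three clauses below are those of the 3-clause variant: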

- Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

- Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

- Neither the name of the <organization> nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

The GNU General Public License (GNU GPL or GPL) is a widely used free software license, which guarantees end users (individuals, organizations, companies) the freedoms to run, study, share (copy), and modify the software. Software that allows these rights is called free software and, if the software is copylefted, requires those rights to be retained. The GPL demands both. The license was originally written by Richard Stallman of the Free Software Foundation (FSF) for the GNU Project. In other words, the GPL grants the recipients of a computer program the rights of the Free Software Definition and uses copyleft to ensure the freedoms are preserved whenever the work is distributed, even when the work is changed or added to. The GPL is a copyleft license, which means that derived works can only be distributed under the same license terms. This is in distinction to permissive free software licenses, of which the BSD licenses and the MIT License are the standard examples. GPL was the first copyleft license for general use.

What are the differences between copyright, trademark, and trade secret?

“Intellectual property is something that is created by the mind.” Typically, we think of ideas as being created by the mind – but intellectual property does not protect bare ideas: rather, it is the expression or symbolic power/recognizability of the ideas that is protected. Thus, it is the design of the rocket that is patented, not the idea of a rocket. It is the painting of the lake that is copyrighted, not the idea of a lake. And it is the consumer-recognizable logo that is trademarked, not the idea of a logo. Intellectual property protects how we express and identify ideas in concrete ways – not the idea itself.

In particular:

Patents: protect functional expressions of an idea – not the idea itself. Machines, methods/processes, manufactures, compositions of matter, and improvements of any of these items can be patented. Thus, I can patent a design for the nozzle on a rocket, or the method of making the rocket, or the method of making the rocket fuel, or the metal in which the rocket fuel is stored, or a new way of transporting the rocket fuel to the rocket. But I cannot patent the broad “idea” of a rocket.

Copyrights: protect the specific creative expression of an idea through any medium of artistic/creative expression – i.e., paintings, photographs, sculpture, writings, software, etc. A copyright protects your painting of a haystack, but it would not prohibit another painter from expressing their artistry or viewpoint by also painting a haystack. Likewise, while Ian Fleming was able to receive a copyright on his particular expression of the idea of a secret agent (i.e., a debonair English secret agent), he could not prevent Rich Wilkes from receiving a copyright on his expression of the idea of a secret agent (i.e., a tattooed bald extreme athlete turned reluctant secret agent).

Trademarks: protect any symbol that indicates the source or origin of the goods or services to which it is affixed. While a trademark can be extremely valuable to its owner, the ultimate purpose of a trademark is to protect consumers – that is, the function of a trademark is to inform the consumer where the goods or services originate. The consumer, knowing the origin of the goods, can make purchasing decisions based on prior knowledge, reputation or marketing.

Trade secret: a formula, practice, process, design, instrument, pattern, commercial method, or compilation of information which is not generally known or reasonably ascertainable by others, and by which a business can obtain an economic advantage over competitors or customers.

While each category is distinct, a product (or components/aspects of a product) may fall into one or more of the categories. For example, software can be protected by both patents and copyrights. The copyright would protect the artistic expression of the idea – i.e., the code itself – while the patent would protect the functional expression of the idea – e.g., using a single click to purchase a book online. Likewise, it is likely that the software company will use a trademark to indicate who made the software.

An additional example would be a logo for a company. The logo may serve as a trademark indicating that all products affixed with the logo are from the same source. The creative and artistic aspects of the logo may also be protected by a copyright.

Where would you use the waterfall software development model? Where would you use agile?

What is the purpose of a version control system?

Why Use a Version Control System?

There are many benefits of using a version control system (VCS) for your projects. Some of them are explained below.

Collaboration

Without a VCS in place, you're probably working together in a shared folder on the same set of files. Shouting through the office that you are currently working on file "xyz" and that, meanwhile, your teammates should keep their fingers off is not an acceptable workflow. It's extremely error-prone as you're essentially doing open-heart surgery all the time: sooner or later, someone will overwrite someone else's changes.

With a VCS, everybody on the team is able to work absolutely freely - on any file at any time. The VCS will later allow you to merge all the changes into a common version. There's no question where the latest version of a file or the whole project is. It's in a common, central place: your version control system.

Other benefits of using a VCS apply whether you work in a team or on your own.

Storing Versions (Properly)

Saving a version of your project after making changes is an essential habit. But without a VCS, this becomes tedious and confusing very quickly:

- How much do you save? Only the changed files or the complete project? In the first case, you'll have a hard time viewing the complete project at any point in time - in the latter case, you'll have huge amounts of unnecessary data lying on your hard drive.

- How do you name these versions? If you're a very organized person, you might be able to stick to an actually comprehensible naming scheme (if you're happy with "acme-inc-redesign-2013-11-12-v23"). However, as soon as it comes to variants (say, you need to prepare one version with the header area and one without it), chances are good you'll eventually lose track.

- The most important question, however, is probably this one: How do you know what exactly is different in these versions? Very few people actually take the time to carefully document each important change and include this in a README file in the project folder.

A version control system acknowledges that there is only one project. Therefore, there's only the one version on your disk that you're currently working on. Everything else - all the past versions and variants - is neatly packed up inside the VCS. When you need it, you can request any version at any time and you'll have a snapshot of the complete project right at hand.

Restoring Previous Versions

Being able to restore older versions of a file (or even the whole project) effectively means one thing: you can't mess up! If the changes you've made lately prove to be garbage, you can simply undo them in a few clicks. Knowing this should make you a lot more relaxed when working on important bits of a project.

Understanding What Happened

Every time you save a new version of your project, your VCS requires you to provide a short description of what was changed. Additionally (if it's a code / text file), you can see what exactly was changed in the file's content. This helps you understand how your project evolved between versions.
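Seeing "what exactly was changed" usually means looking at a diff between two versions of a file. The sketch below produces one with Python's standard `difflib` module; the file contents and the labels `v1` and `v2` are invented for the example, and a real VCS has its own diff implementation.

```python
import difflib

# Two versions of the same (made-up) configuration file, as lists of lines.
old = ["title = Home", "color = blue", "width = 80"]
new = ["title = Home", "color = green", "width = 80", "height = 24"]

# unified_diff yields header lines, then lines prefixed with
# "-" (removed), "+" (added) or " " (unchanged context).
diff = list(difflib.unified_diff(old, new, fromfile="v1", tofile="v2", lineterm=""))
print("\n".join(diff))
```

The changed line shows up as a removed `color = blue` next to an added `color = green`, and the new `height = 24` line appears as a pure addition.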

Backup

A side effect of using a distributed VCS like Git is that it can act as a backup: every team member has a full-blown version of the project on their disk, including the project's complete history. Should your beloved central server break down (and your backup drives fail), all you need for recovery is one of your teammates' local Git repositories.

What would you store in a version control system?

Basic git commands

git config: Sets configuration values for your user name, email, GPG key, preferred diff algorithm, file formats and more. Example:

    git config --global user.name "My Name"
    git config --global user.email "user@domain.com"
    cat ~/.gitconfig
    [user]
        name = My Name
        email = user@domain.com

git init: Initializes a git repository by creating the initial ‘.git’ directory in a new or existing project. Example:

    cd /home/user/my_new_git_folder/
    git init

git clone: Makes a copy of a Git repository from a remote source. Also adds the original location as a remote, so you can fetch from it again and push to it if you have permission. Example:

    git clone git@github.com:user/test.git

git add: Adds file changes in your working directory to your index. Example:

    git add .

git rm: Removes files from your index and your working directory so they will no longer be tracked. Example:

    git rm filename

git commit: Takes all of the changes written in the index, creates a new commit object pointing to them and sets the branch to point to that new commit. With -a, changes to already tracked files are staged automatically before committing; new, untracked files still need git add. Examples:

    git commit -m 'committing added changes'
    git commit -a -m 'committing all changes to tracked files'

git status: Shows you the status of files in the index versus the working directory. It will list out files that are untracked (only in your working directory), modified (tracked but not yet updated in your index), and staged (added to your index and ready for committing). Example:

    git status
    # On branch master
    #
    # Initial commit
    #
    # Untracked files:
    #   (use "git add <file>..." to include in what will be committed)
    #
    #       README
    nothing added to commit but untracked files present (use "git add" to track)
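The three groups that git status reports can be understood with a toy model. The sketch below is not how Git is implemented (Git compares object hashes and also handles deletions and renames); it only assumes three plain dictionaries mapping file names to contents, and the function name `status` is chosen for the example.

```python
def status(head, index, workdir):
    """Classify files the way `git status` groups them.

    Each argument maps a file name to that file's content:
    head = last commit, index = staging area, workdir = files on disk.
    """
    # Only in the working directory: Git does not know these files yet.
    untracked = sorted(f for f in workdir if f not in index)
    # Tracked, but the copy on disk differs from the copy in the index.
    modified = sorted(f for f in index
                      if f in workdir and workdir[f] != index[f])
    # In the index with content that differs from the last commit.
    staged = sorted(f for f in index if head.get(f) != index[f])
    return {"untracked": untracked, "modified": modified, "staged": staged}


head = {"README": "v1"}
index = {"README": "v1", "main.py": "print('hi')"}        # main.py was added
workdir = {"README": "v2", "main.py": "print('hi')", "notes.txt": "todo"}

print(status(head, index, workdir))
```

In this example `notes.txt` is untracked, `README` is modified but not yet staged, and `main.py` is staged and ready to be committed.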

git branch: Lists existing branches, including remote branches if ‘-a’ is provided. Creates a new branch if a branch name is provided. Example:

    git branch -a
    * master
      remotes/origin/master

git checkout: Checks out a different branch, that is, switches branches by updating the index, working tree, and HEAD to reflect the chosen branch. Example:

    git checkout newbranch

git merge: Merges one or more branches into your current branch and automatically creates a new commit if there are no conflicts. Example:

    git merge newbranchversion

git reset: With --hard, resets your index and working directory to the state of your last commit, discarding uncommitted changes to tracked files. Example:

    git reset --hard HEAD

git stash: Temporarily saves changes that you don’t want to commit immediately. You can apply the changes later. Example:

    git stash
    Saved working directory and index state "WIP on master: 84f241e first commit"
    HEAD is now at 84f241e first commit
    (To restore them type "git stash apply")

git tag: Tags a specific commit with a simple, human-readable handle that never moves. Example:

    git tag -a v1.0 -m 'this is version 1.0 tag'

git fetch: Fetches all the objects from the remote repository that are not present in the local one. Example:

    git fetch origin

git pull: Fetches the changes from the remote repository and merges them into your local branch. This command is equivalent to running git fetch followed by git merge. Example:

    git pull origin

git push: Pushes all the modified local objects to the remote repository and advances its branches. Example:

    git push origin master

git remote: Shows all the remotes configured for your repository. Example:

    git remote
    origin