Python+OpenCV+Windows

From ICO wiki


Latest revision as of 12:14, 20 February 2016

What do you need?

- Python 2.7 (I recommend the WinPython distribution, because it has many packages preinstalled)

- OpenCV 2.4.11 (You can get this from here)

- Windows (tested on Windows 10; it probably works on other versions too)

What do I do now?

- Run all the .exe installers (this Python distribution takes much longer to install than the standard one, so make some coffee meanwhile)

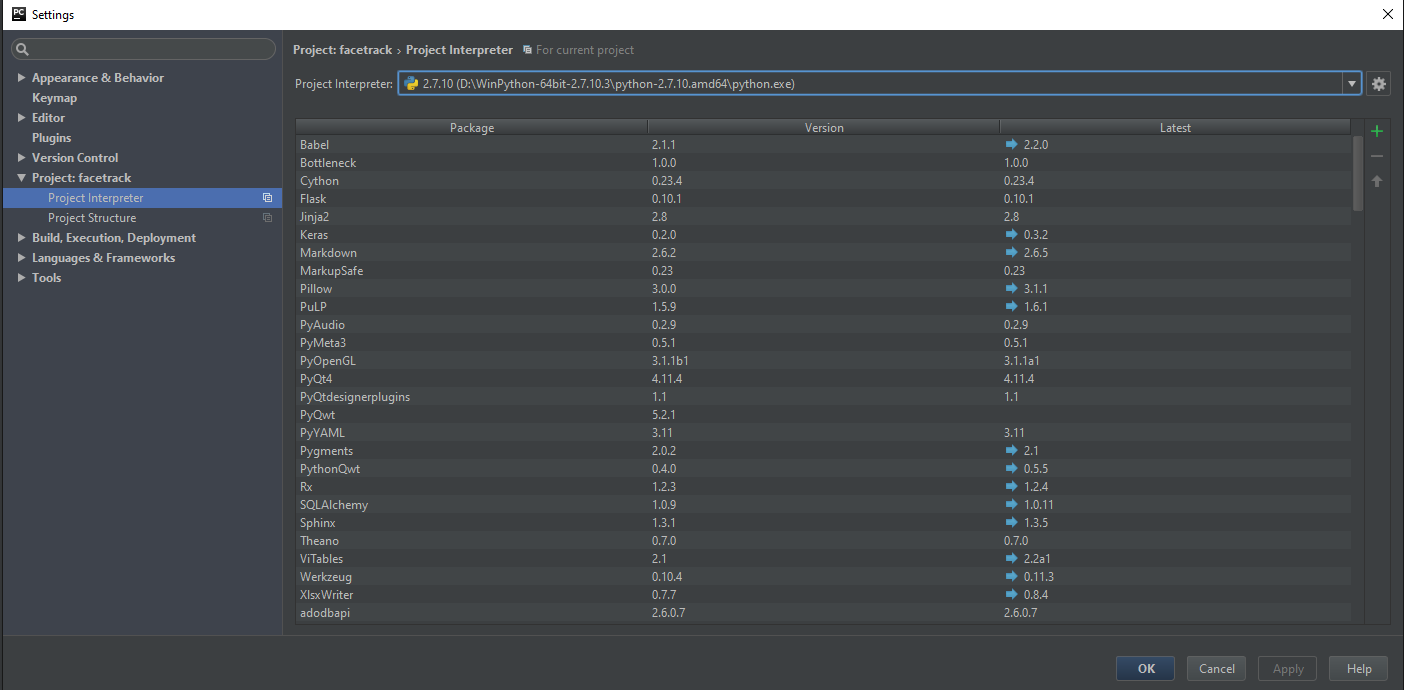

- Open these two folders: opencv\build\python\2.7 plus the subfolder for your system (x64 for 64-bit, x86 for 32-bit), and WinPython-64bit-2.7.10.3\python-2.7.10.amd64

- Copy (CTRL + C) the file called cv2.pyd from the OpenCV folder

- Paste the file into the DLLs and Lib\site-packages folders inside the WinPython folder
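The copy step above can also be scripted. This is only a minimal sketch: the function name `install_cv2_binding` and the example paths in the usage note are hypothetical, and it assumes the folder layout described in the list (a `DLLs` folder and a `Lib\site-packages` folder under the Python root).

```python
import os
import shutil


def install_cv2_binding(pyd_path, python_root):
    """Copy cv2.pyd into <python_root>/DLLs and <python_root>/Lib/site-packages.

    Returns the list of files that were written.
    """
    written = []
    for sub in ("DLLs", os.path.join("Lib", "site-packages")):
        dest_dir = os.path.join(python_root, sub)
        # In a real Python installation these folders already exist;
        # creating them here only keeps the sketch self-contained.
        if not os.path.isdir(dest_dir):
            os.makedirs(dest_dir)
        dest = os.path.join(dest_dir, os.path.basename(pyd_path))
        shutil.copy2(pyd_path, dest)
        written.append(dest)
    return written
```

It could be called as `install_cv2_binding(r"opencv\build\python\2.7\x64\cv2.pyd", r"WinPython-64bit-2.7.10.3\python-2.7.10.amd64")`, with both paths adjusted to wherever the files were actually unpacked.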

How do I set up PyCharm to use my new Interpreter?

- Open PyCharm and create a new project

- Select File -> Settings -> Project -> Project Interpreter -> gear icon -> Add Local, then navigate to Winpython\python\python.exe and select it
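To confirm that PyCharm really picked up the new interpreter, a short check script can be run inside the project. This is a sketch, not part of the original setup; `check_module` is a hypothetical helper name, and the script only reports what it finds.

```python
import importlib
import sys


def check_module(name):
    """Return (True, version) if `name` can be imported, else (False, error text)."""
    try:
        module = importlib.import_module(name)
    except ImportError as exc:
        return False, str(exc)
    return True, getattr(module, "__version__", "unknown")


# Show which python.exe is running this script and whether cv2 is importable.
print("Interpreter: %s" % sys.executable)
print("cv2 importable: %s (%s)" % check_module("cv2"))
```

If the printed interpreter path does not point into the WinPython folder, the project is probably still using a different Python installation.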

How do I test if everything works?

- Copy the following code:

    import cv2
    import sys

    # Path to the Haar cascade XML file (first command-line argument)
    cascPath = sys.argv[1]
    faceCascade = cv2.CascadeClassifier(cascPath)

    # Open the default webcam (device 0)
    video_capture = cv2.VideoCapture(0)

    while True:
        # Capture frame-by-frame
        ret, frame = video_capture.read()
        if not ret:
            # No frame received (camera missing or busy), so stop
            break

        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

        faces = faceCascade.detectMultiScale(
            gray,
            scaleFactor=1.1,
            minNeighbors=5,
            minSize=(30, 30),
            flags=cv2.cv.CV_HAAR_SCALE_IMAGE  # OpenCV 2.4 constant
        )

        # Draw a rectangle around the faces
        for (x, y, w, h) in faces:
            cv2.rectangle(frame, (x, y), (x+w, y+h), (0, 255, 0), 2)

        # Display the resulting frame
        cv2.imshow('Video', frame)

        # Press "q" to quit
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    # When everything is done, release the capture
    video_capture.release()
    cv2.destroyAllWindows()

- Get the Haar cascade feature set used for face detection:

    wget https://raw.githubusercontent.com/shantnu/Webcam-Face-Detect/master/haarcascade_frontalface_default.xml

- Copy this file into the folder of your new project

- Change the cascPath line of your code so that it points to the XML file:

    cascPath = "LinkToFile.xml"

- For example, in my case it is:

    cascPath = "face.xml"
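A wrong cascade path is easy to miss, because the classifier is created without complaint and then simply finds no faces. The sketch below checks the path first; `resolve_cascade` is a hypothetical helper, not an OpenCV function, and it assumes the XML file sits in a folder you pass in (for example the script's own folder).

```python
import os


def resolve_cascade(filename, base_dir):
    """Return the full path to the cascade file, or raise if it is missing."""
    path = os.path.join(base_dir, filename)
    if not os.path.isfile(path):
        raise IOError("Cascade file not found: " + path)
    return path


# Example use inside the face detection script (left commented out here):
# cascPath = resolve_cascade("face.xml", os.path.dirname(os.path.abspath(__file__)))
# faceCascade = cv2.CascadeClassifier(cascPath)
# if faceCascade.empty():
#     raise IOError("OpenCV could not load " + cascPath)
```

Failing early like this gives a clear error message instead of a video window in which nothing is ever detected.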

Balltrack on localhost (http://127.0.0.1:5000/video_feed)

    git clone https://github.com/egaia/python-pythonize-robots

I am still using the setup described at the top of this page. The GitHub repository contains two new files, camera.py and main.py. To test only the ball tracking, run ballFind.py; to use the video streaming as well, run main.py.

    #!/usr/bin/env python
    #
    # Project: Video Streaming with Flask
    # Author: Log0 <im [dot] ckieric [at] gmail [dot] com>
    # Date: 2014/12/21
    # Website: http://www.chioka.in/
    # Description:
    # Modified to support streaming out with webcams, and not just raw JPEGs.
    # Most of the code credits to Miguel Grinberg, except that I made a small tweak. Thanks!
    # Credits: http://blog.miguelgrinberg.com/post/video-streaming-with-flask
    #
    # Usage:
    # 1. Install Python dependencies: cv2, flask. (wish that pip install works like a charm)
    # 2. Run "python main.py".
    # 3. Navigate the browser to the local webpage.
    from flask import Flask, render_template, Response
    from camera import VideoCamera

    app = Flask(__name__)

    @app.route('/')
    def index():
        return render_template('index.html')

    def gen(camera):
        # Yield one JPEG frame after another as parts of a multipart response
        while True:
            frame = camera.get_frame()
            yield (b'--frame\r\n'
                   b'Content-Type: image/jpeg\r\n\r\n' + frame + b'\r\n\r\n')

    @app.route('/video_feed')
    def video_feed():
        return Response(gen(VideoCamera()),
                        mimetype='multipart/x-mixed-replace; boundary=frame')

    if __name__ == '__main__':
        app.run(host='0.0.0.0', debug=True)
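The streaming in main.py relies on a multipart/x-mixed-replace response: every chunk yielded by gen() is one part, and the browser replaces the previous image with the newest one. The following sketch shows how a single part is assembled, without Flask or a camera; `mjpeg_part` is a hypothetical helper that mirrors the byte layout used in gen().

```python
def mjpeg_part(jpeg_bytes, boundary=b"frame"):
    """Wrap one JPEG image as a single part of a multipart stream."""
    header = b"--" + boundary + b"\r\n" + b"Content-Type: image/jpeg\r\n\r\n"
    # The boundary name must match the one given in the response mimetype.
    return header + jpeg_bytes + b"\r\n\r\n"


# A stand-in payload; in main.py this would be the output of camera.get_frame().
part = mjpeg_part(b"\xff\xd8fake-jpeg-data\xff\xd9")
```

The boundary string "frame" is arbitrary, but it has to be the same in the part header and in `mimetype='multipart/x-mixed-replace; boundary=frame'`.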

    # camera.py
    import cv2
    import numpy as np
    import imutils
    import argparse
    from collections import deque

    class VideoCamera(object):
        ap = argparse.ArgumentParser()
        ap.add_argument("-b", "--buffer", type=int, default=64,
                        help="max buffer size")
        args = vars(ap.parse_args())

        # HSV range of the ball color
        ballLower = (5, 160, 160)
        ballUpper = (20, 255, 255)
        # most recent ball centers, newest first
        pts = deque(maxlen=args["buffer"])

        def __init__(self):
            # Using OpenCV to capture from device 0. If you have trouble capturing
            # from a webcam, comment the line below out and use a video file
            # instead.
            self.video = cv2.VideoCapture(0)
            # If you decide to use video.mp4, you must have this file in the
            # same folder as main.py.
            # self.video = cv2.VideoCapture('video.mp4')

        def __del__(self):
            self.video.release()

        def get_frame(self):
            image = self.editFrame()
            # We are using Motion JPEG, but OpenCV defaults to capture raw images,
            # so we must encode it into JPEG in order to correctly display the
            # video stream.
            ret, jpeg = cv2.imencode('.jpg', image)
            return jpeg.tobytes()

        def editFrame(self):
            success, frame = self.video.read()

            # mirror the image horizontally
            frame = cv2.flip(frame, 1)
            frame = imutils.resize(frame, width=600)
            # blur first to reduce noise, then convert to HSV
            blurred = cv2.GaussianBlur(frame, (11, 11), 0)
            hsv = cv2.cvtColor(blurred, cv2.COLOR_BGR2HSV)

            # construct a mask for the ball color, then perform
            # a series of dilations and erosions to remove any small
            # blobs left in the mask
            mask = cv2.inRange(hsv, self.ballLower, self.ballUpper)
            mask = cv2.erode(mask, None, iterations=2)
            mask = cv2.dilate(mask, None, iterations=2)

            # find contours in the mask and initialize the current
            # (x, y) center of the ball
            cnts = cv2.findContours(mask.copy(), cv2.RETR_EXTERNAL,
                                    cv2.CHAIN_APPROX_SIMPLE)[-2]
            center = None

            # only proceed if at least one contour was found
            if len(cnts) > 0:
                # find the largest contour in the mask, then use
                # it to compute the minimum enclosing circle and
                # centroid
                c = max(cnts, key=cv2.contourArea)
                ((x, y), radius) = cv2.minEnclosingCircle(c)
                M = cv2.moments(c)
                # m00 is the contour area; skip the centroid if it is zero
                if M["m00"] > 0:
                    center = (int(M["m10"] / M["m00"]), int(M["m01"] / M["m00"]))

                # only proceed if the radius meets a minimum size
                if radius > 10 and center is not None:
                    # draw the circle and centroid on the frame,
                    # then update the list of tracked points
                    cv2.circle(frame, (int(x), int(y)), int(radius),
                               (0, 255, 255), 2)
                    cv2.circle(frame, center, 5, (0, 0, 255), -1)

            # update the points queue
            self.pts.appendleft(center)

            # loop over the set of tracked points
            for i in xrange(1, len(self.pts)):
                # if either of the tracked points are None, ignore
                # them
                if self.pts[i - 1] is None or self.pts[i] is None:
                    continue

                # otherwise, compute the thickness of the line and
                # draw the connecting lines
                thickness = int(np.sqrt(self.args["buffer"] / float(i + 1)) * 2.5)
                cv2.line(frame, self.pts[i - 1], self.pts[i], (0, 0, 255), thickness)

            return frame
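The trail drawn behind the ball comes from two small ideas in camera.py: a deque with a maximum length that always keeps the newest centers first, and a line thickness that shrinks for older points. The sketch below reproduces both without OpenCV, so it can be tried on its own; `trail_thickness` and `drawable_segments` are hypothetical names, and `math.sqrt` stands in for `np.sqrt`.

```python
import math
from collections import deque


def trail_thickness(buffer_size, i):
    """Thickness of the segment ending at index i (same formula as camera.py)."""
    return int(math.sqrt(buffer_size / float(i + 1)) * 2.5)


def drawable_segments(points, buffer_size):
    """Return (start, end, thickness) for every pair of consecutive known points."""
    segments = []
    for i in range(1, len(points)):
        # A None entry means the ball was not found in that frame.
        if points[i - 1] is None or points[i] is None:
            continue
        segments.append((points[i - 1], points[i], trail_thickness(buffer_size, i)))
    return segments


# With maxlen=3 the oldest entry falls off the right end on each appendleft.
pts = deque(maxlen=3)
for center in [(10, 10), (12, 11), None, (15, 13)]:
    pts.appendleft(center)
```

After the loop, `pts` holds `(15, 13)`, `None`, `(12, 11)`, so no segment is drawn across the frame where the ball was lost.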